OpenCV Tracking

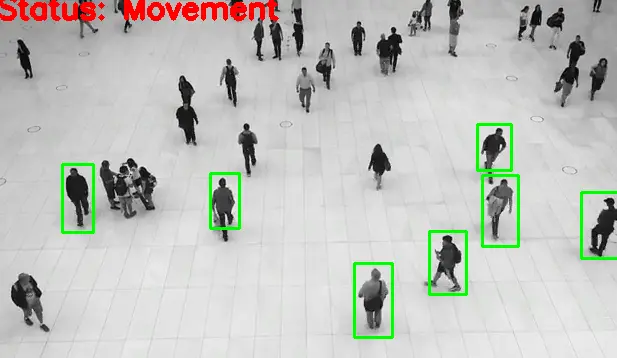

This demonstration shows how to build a basic motion detection and tracking system using Python and OpenCV. By the end of this article, every moving person in the video is marked with a rectangular bounding box.

Create Motion Detection and Tracking System Using Python and OpenCV

First, we need to read two frames from the CAP instance, the cv2.VideoCapture object created in the complete source code at the end of this article.

ret, F1 = CAP.read()

Similarly, we are going to read the second frame.

ret, F2 = CAP.read()

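Inside the main loop, we first stop if the last read failed. Then we declare a variable called DF and use the absdiff() function, which computes the absolute per-pixel difference between the first frame, F1, and the second frame, F2. Pixels that changed between the frames get large values, while the static background stays near zero.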

while CAP.isOpened():
    if not ret:
        print(ret)
        break
    DF = cv2.absdiff(F1, F2)

Next, convert this difference to grayscale using the cvtColor() method. The first argument is DF, and the second is COLOR_BGR2GRAY, which converts a BGR color frame to grayscale.

Why grayscale? We will find contours in a later stage, and contour detection works on a single-channel image, so the blurring, thresholding, and dilation steps that follow are all applied to the grayscale frame.

Gray_Scale = cv2.cvtColor(DF, cv2.COLOR_BGR2GRAY)

Once we have the grayscale frame, we blur it using the GaussianBlur() method to suppress noise. The first argument is Gray_Scale, the second is the kernel size (5, 5), and the third is the Sigma X value; passing 0 lets OpenCV derive sigma from the kernel size.

BL = cv2.GaussianBlur(Gray_Scale, (5, 5), 0)

Next, we binarize the blurred frame using the threshold() method. It returns two values: the threshold that was used, which we discard by assigning it to _, and the thresholded image, which we store in thresh.

The first argument is the blurred image as the source, and the second is the threshold value of 20. The third is the maximum value, 255, which is assigned to every pixel above the threshold, and the fourth is the threshold type, THRESH_BINARY.

_, thresh = cv2.threshold(BL, 20, 255, cv2.THRESH_BINARY)

We need to dilate the thresholded image to fill in the holes; this helps us find better contours. The first argument of dilate() is the thresholded image, and the second is the kernel (structuring element); we pass None, so OpenCV uses its default 3x3 rectangular kernel.

The third argument sets the number of iterations to 3. If the detected regions come out too fragmented or too merged, you can increase or decrease the number of iterations.

DL = cv2.dilate(thresh, None, iterations=3)

In the next step, we find the contours on the dilated image. The findContours() method gives us two results, the contours and the hierarchy, but we are not going to use the second one. Note that this two-value return applies to OpenCV 4.x; OpenCV 3.x returns three values.

We pass the dilated image as the first argument. The second is the retrieval mode RETR_TREE, which retrieves all contours and reconstructs the full hierarchy of nested contours. The third is the approximation method CHAIN_APPROX_SIMPLE, which compresses straight segments and keeps only their end points.

CTS, _ = cv2.findContours(DL, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

In the next step, we draw the rectangles, so we iterate over all the contours using a for loop. CTS is a sequence of contours; for each one, we first get the coordinates of its bounding box (x, y, width, and height) using the boundingRect() method.

Then we compute the contour area with contourArea(). If the area is less than 900, we skip the contour with continue and draw no rectangle for it; otherwise, we draw the rectangle. This filters out small movements and noise.

To draw the rectangle, we use the cv2.rectangle() method. The first argument is the image to draw on, F1. The second is point 1, the top-left corner (x, y), and the third is point 2, the bottom-right corner (x + w, y + h). The next argument is the color as a BGR tuple, and the last is the line thickness.

for CT in CTS:
    (x, y, w, h) = cv2.boundingRect(CT)
    if cv2.contourArea(CT) < 900:
        continue
    cv2.rectangle(F1, (x, y), (x + w, y + h), (0, 255, 0), 2)

We place some text on the image whenever movement is observed, using the cv2.putText() method. Its first argument is the image F1, the second is the text, and the third is the origin, the bottom-left corner of the text in the image.

The next argument is the font face, FONT_HERSHEY_SIMPLEX, followed by the font scale, the font color, and finally the thickness of the text.

cv2.putText(
    F1,
    "Status: {}".format("Movement"),
    (10, 20),
    cv2.FONT_HERSHEY_SIMPLEX,
    1,
    (0, 0, 255),
    3,
)

Now we write some code after the for loop, still inside the while loop. First, we resize F1 to the output resolution and write it to the output video, then display F1, the frame with the rectangles drawn on it.

After that, we assign the value of F2 to F1 and then read a new frame into F2. This way, every iteration compares the two most recent frames.

IMG = cv2.resize(F1, (1280, 720))
OP.write(IMG)
cv2.imshow("feed", F1)
F1 = F2
ret, F2 = CAP.read()

Complete source code:

import cv2

# Open the input video; FR_W and FR_H hold its frame size for reference.
CAP = cv2.VideoCapture("input.avi")
FR_W = int(CAP.get(cv2.CAP_PROP_FRAME_WIDTH))
FR_H = int(CAP.get(cv2.CAP_PROP_FRAME_HEIGHT))

# The writer expects 1280x720 frames, so each frame is resized before writing.
FRC = cv2.VideoWriter_fourcc("X", "V", "I", "D")
OP = cv2.VideoWriter("output.avi", FRC, 5.0, (1280, 720))

# Read the first two frames to compare.
ret, F1 = CAP.read()
ret, F2 = CAP.read()
if F1 is not None:
    print(F1.shape)

while CAP.isOpened():
    # Stop when no more frames can be read.
    if not ret:
        print(ret)
        break
    # Difference -> grayscale -> blur -> threshold -> dilate -> contours.
    DF = cv2.absdiff(F1, F2)
    Gray_Scale = cv2.cvtColor(DF, cv2.COLOR_BGR2GRAY)
    BL = cv2.GaussianBlur(Gray_Scale, (5, 5), 0)
    _, thresh = cv2.threshold(BL, 20, 255, cv2.THRESH_BINARY)
    DL = cv2.dilate(thresh, None, iterations=3)
    CTS, _ = cv2.findContours(DL, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    for CT in CTS:
        (x, y, w, h) = cv2.boundingRect(CT)
        # Skip small contours, which are usually noise.
        if cv2.contourArea(CT) < 900:
            continue
        cv2.rectangle(F1, (x, y), (x + w, y + h), (0, 255, 0), 2)
        cv2.putText(
            F1,
            "Status: {}".format("Movement"),
            (10, 20),
            cv2.FONT_HERSHEY_SIMPLEX,
            1,
            (0, 0, 255),
            3,
        )
    # Save and display the annotated frame.
    IMG = cv2.resize(F1, (1280, 720))
    OP.write(IMG)
    cv2.imshow("feed", F1)
    # Slide the frame pair forward by one frame.
    F1 = F2
    ret, F2 = CAP.read()
    # Exit when the Esc key (code 27) is pressed.
    if cv2.waitKey(40) == 27:
        break

cv2.destroyAllWindows()
CAP.release()
OP.release()

In the output, the status shows movement because the people in the video are moving, and a rectangle is drawn around each moving person.

Hello! I am Salman Bin Mehmood (Baum), a software developer, and I help organizations address complex problems. My expertise lies in back-end development, data science, and machine learning. I am a lifelong learner, currently working on the metaverse and enrolled in a course on building AI applications with Python. I love solving problems and developing bug-free software for people. I write content related to Python and emerging technologies.