How to Convert Spark List to Pandas Dataframe

- Use the toPandas() Method to Convert Spark List to Pandas Dataframe
- Use the parallelize() Function to Convert Spark List to Python Pandas Dataframe
- Conclusion

This article shows how to convert a list of Spark Row objects into a Pandas dataframe.

Use the toPandas() Method to Convert Spark List to Pandas Dataframe

Syntax of createDataFrame():

current_session.createDataFrame(data, schema=None, samplingRatio=None, verifySchema=True)

Parameters:

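data: The input data, such as an RDD, a list (for example, of Row objects, tuples, or dicts), or a pandas DataFrame.

schema: The column names or full schema of the dataframe, given as a list of names, a StructType, or a DDL string. If it is None, Spark infers the schema from the data.

samplingRatio (float): The fraction of rows sampled when inferring the schema. If it is None, the first few rows are used.

verifySchema (bool): Whether to check the data types of every row against the schema. It is enabled by default.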

The createDataFrame() method returns a Spark DataFrame object. An example of this method is as follows.

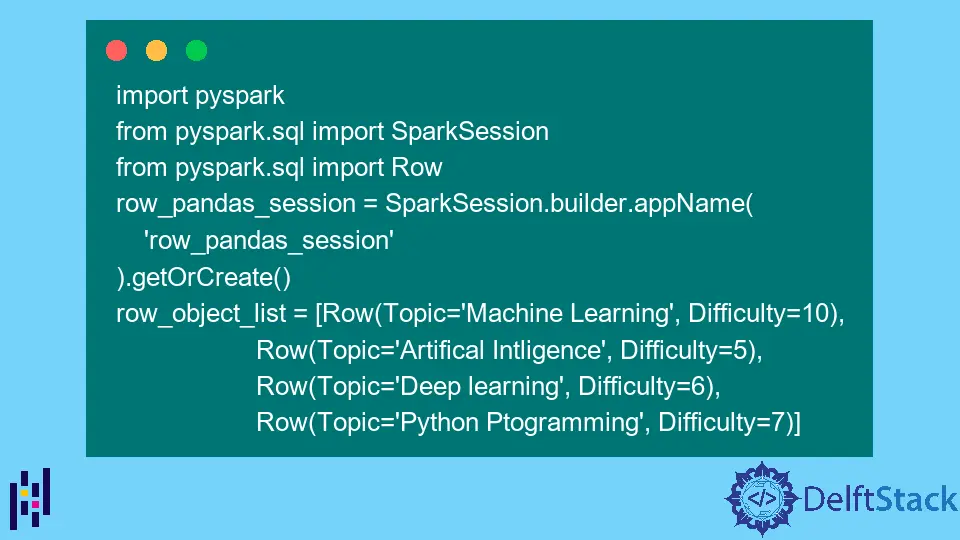

First, we import PySpark and the related modules and build a list of Row objects. Then we pass this list to createDataFrame() to create a Spark dataframe.

Code:

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql import Row

row_pandas_session = SparkSession.builder.appName("row_pandas_session").getOrCreate()

row_object_list = [

Row(Topic="Machine Learning", Difficulty=10),

Row(Topic="Artifical Intligence", Difficulty=5),

Row(Topic="Deep learning", Difficulty=6),

Row(Topic="Python Ptogramming", Difficulty=7),

]

To create a Spark dataframe, use the createDataFrame() method.

df = row_pandas_session.createDataFrame(row_object_list)

We use the show() method to display the Spark dataframe we created.

df.show()

Output:

+--------------------+----------+
|               Topic|Difficulty|
+--------------------+----------+
|    Machine Learning|        10|
|Artifical Intligence|         5|
|       Deep learning|         6|
|  Python Ptogramming|         7|
+--------------------+----------+

Finally, we use the toPandas() method to convert the Spark dataframe to a Pandas dataframe, and then we print the Pandas dataframe.

pandas_df = df.toPandas()

print(pandas_df)

Output:

                  Topic  Difficulty
0      Machine Learning          10
1  Artifical Intligence           5
2         Deep learning           6
3    Python Ptogramming           7

Complete code:

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql import Row

row_pandas_session = SparkSession.builder.appName("row_pandas_session").getOrCreate()

row_object_list = [

Row(Topic="Machine Learning", Difficulty=10),

Row(Topic="Artifical Intligence", Difficulty=5),

Row(Topic="Deep learning", Difficulty=6),

Row(Topic="Python Ptogramming", Difficulty=7),

]

df = row_pandas_session.createDataFrame(row_object_list)

df.show()

pandas_df = df.toPandas()

print(pandas_df)

Use the parallelize() Function to Convert Spark List to Python Pandas Dataframe

To create an RDD (resilient distributed dataset), we use the parallelize() method of SparkContext. Parallelizing copies the elements of a local collection, such as a Python list, into a distributed dataset on which we can perform parallel operations.

Syntax:

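sc.parallelize(c, numSlices=None)

Where:

sc: A SparkContext object (available as the sparkContext attribute of a SparkSession)

Parameters:

c: The local collection, such as a Python list, to distribute as an RDD.

numSlices: The number of partitions to split the data into. This parameter is optional; if it is omitted, Spark uses its default parallelism.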

The code below is the same as in the previous section.

import pyspark

from pyspark.sql import SparkSession

from pyspark.sql import Row

row_pandas_session = SparkSession.builder.appName("row_pandas_session").getOrCreate()

row_object_list = [

Row(Topic="Machine Learning", Difficulty=10),

Row(Topic="Artifical Intligence", Difficulty=5),

Row(Topic="Deep learning", Difficulty=6),

Row(Topic="Python Ptogramming", Difficulty=7),

]

Next, we use parallelize() to distribute the list as an RDD.

rdd = row_pandas_session.sparkContext.parallelize(row_object_list)

print(rdd)

Output:

ParallelCollectionRDD[11] at readRDDFromFile at PythonRDD.scala:274

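The RDD ID and source location in this output depend on your session and Spark version. Now, we create the dataframe from the RDD, as shown below.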

df = row_pandas_session.createDataFrame(rdd)

df.show()

Output:

+--------------------+----------+
|               Topic|Difficulty|
+--------------------+----------+
|    Machine Learning|        10|
|Artifical Intligence|         5|
|       Deep learning|         6|
|  Python Ptogramming|         7|
+--------------------+----------+

Finally, convert it into a Pandas dataframe.

df2 = df.toPandas()

print(df2)

Output:

                  Topic  Difficulty
0      Machine Learning          10
1  Artifical Intligence           5
2         Deep learning           6
3    Python Ptogramming           7

Conclusion

In this article, we used two methods to convert a list of Spark Row objects to a Pandas dataframe. In the first, we passed the list directly to the createDataFrame() method and then called the toPandas() method on the resulting Spark dataframe.

In the second, we first used the parallelize() method to distribute the list as an RDD, and then passed that RDD to createDataFrame() to create the Spark dataframe.

In both cases, the Spark dataframe is then converted to a Pandas dataframe using the toPandas() method.