Derivative of ReLU Function in Python

- Implement the ReLU Function in Python
- Derivative of ReLU Function in Python Using the Formula-Based Approach
- Derivative of the ReLU Function in Python Using the NumPy Library
- Derivative of the ReLU Function in Python Using the Lambda Function
- Conclusion

The Rectified Linear Unit (ReLU) is one of the most widely used activation functions in neural networks, valued for being cheap to compute and simple to differentiate. This article shows how to implement the ReLU function and its derivative in Python, a language widely used in data science and machine learning.

We start with a basic implementation of the ReLU function and then implement its derivative in three ways: a formula-based approach, the NumPy library, and lambda functions.

Implement the ReLU Function in Python

As a mathematical function, we can define the ReLU function as follows:

f(x) = max(0,x)

This function returns x unchanged for positive inputs (it is linear in x there) and outputs zero for all negative values.

The following pseudo-code represents the ReLU function.

if input > 0:
    return input
else:
    return 0

Following the pseudo-code above, we can implement the ReLU function as follows:

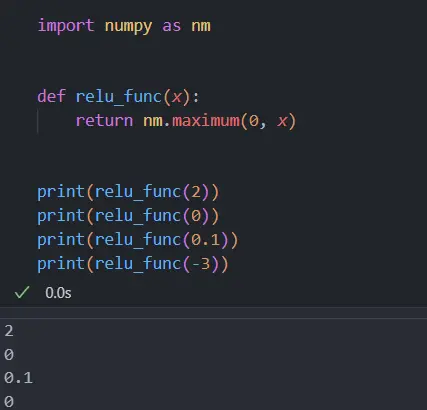

import numpy as np


def relu_func(x):
    # Element-wise maximum of 0 and x; works for scalars and NumPy arrays
    return np.maximum(0, x)


print(relu_func(2))
print(relu_func(0))
print(relu_func(0.1))
print(relu_func(-3))

This example defines a function relu_func with a single parameter x and returns the ReLU of that input.

We pass one number at a time to relu_func as an argument.

NumPy's maximum() function returns the element-wise maximum of its two arguments. If the input is greater than 0, the function returns the input unchanged; otherwise, it returns zero.

Because np.maximum() operates element-wise, the ReLU function implemented above works with any single number and also with NumPy arrays.

We can get the output as follows:

2
0
0.1
0
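To illustrate the claim that the same function also handles NumPy arrays, here is a minimal sketch. The array values are made up for illustration, and relu_func is redefined so the snippet runs on its own.

```python
import numpy as np


def relu_func(x):
    # Element-wise maximum of 0 and x
    return np.maximum(0, x)


# Hypothetical sample inputs covering negative, zero, and positive values
values = np.array([-3.0, -0.5, 0.0, 0.1, 2.0])
result = relu_func(values)

# Negative entries are clipped to 0; non-negative entries pass through
print(result.tolist())  # [0.0, 0.0, 0.0, 0.1, 2.0]
```

No loop is needed: np.maximum broadcasts the scalar 0 against every element of the array.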

Derivative of ReLU Function in Python Using the Formula-Based Approach

The derivative of the ReLU function is also called the gradient of ReLU. The derivative of a function is its slope.

If we plot y = ReLU(x), the gradient is 1 wherever x is greater than zero.

If x is less than zero, the gradient is 0. At x = 0, the derivative does not exist, because the slopes on the left and right sides differ.

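The derivative of the ReLU function can be written as follows. Because the derivative is undefined at x = 0, implementations pick a value there by convention; the code in this article uses 0:

f'(x) = 1, x > 0

      = 0, x <= 0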
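As a quick sanity check of the piecewise definition, the sketch below compares it with a numerical slope estimate. The helper central_difference and the step size h are assumptions introduced here for illustration; they are not part of the original article.

```python
def relu(x):
    return max(0.0, x)


def relu_derivative(x):
    # Convention used in this article: the derivative at x = 0 is taken as 0
    return 1.0 if x > 0 else 0.0


def central_difference(f, x, h=1e-6):
    # Hypothetical helper: numerical slope estimate (f(x+h) - f(x-h)) / (2h)
    return (f(x + h) - f(x - h)) / (2 * h)


# Away from zero, the formula and the numerical estimate should agree closely
for x in (-2.0, -0.5, 0.5, 3.0):
    print(x, relu_derivative(x), central_difference(relu, x))

# At x = 0 the one-sided slopes differ, which is why the derivative is undefined there
h = 1e-6
left_slope = (relu(0.0) - relu(-h)) / h   # slope approaching from the left
right_slope = (relu(h) - relu(0.0)) / h   # slope approaching from the right
print(left_slope, right_slope)
```

The left slope evaluates to 0 and the right slope to 1, matching the two branches of the formula.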

We can plot the ReLU function and its derivative on the same graph. Let's look at the following example.

import numpy as np

import matplotlib.pyplot as plt

def relu(x):

return max(0, x)

def relu_derivative(x):

return 1 if x > 0 else 0

# Generate a range of values

x_values = np.linspace(-2, 2, 400)

relu_values = [relu(x) for x in x_values]

relu_derivative_values = [relu_derivative(x) for x in x_values]

# Plotting

plt.plot(x_values, relu_values, label="ReLU Function")

plt.plot(x_values, relu_derivative_values, label="ReLU Derivative", linestyle="dashed")

plt.xlabel("x")

plt.ylabel("Function values")

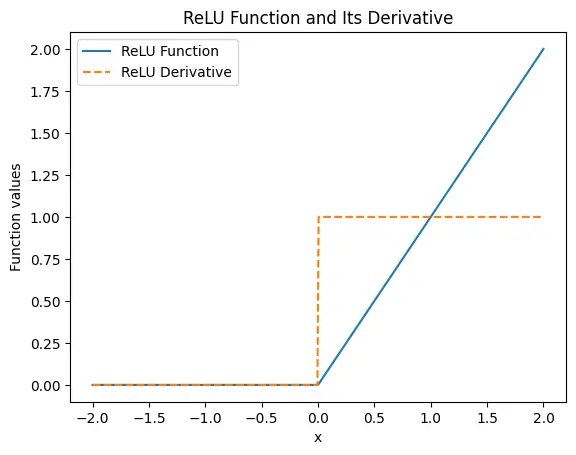

plt.title("ReLU Function and Its Derivative")

plt.legend()

plt.show()

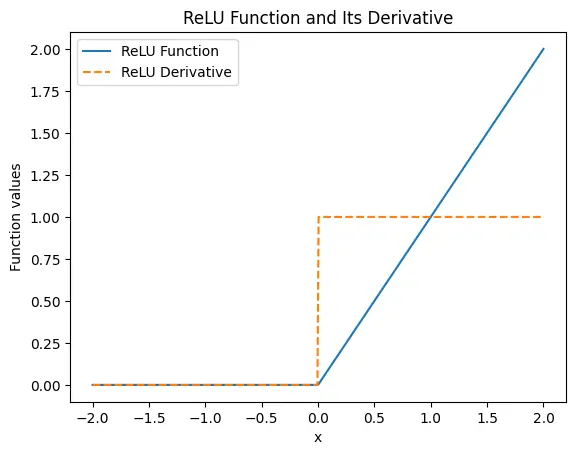

We start by defining two functions: relu(x) for the ReLU function, and relu_derivative(x) for its derivative. Each function accepts a single argument, x, representing the input value.

The relu function employs Python’s max function to return either 0 or x, whichever is greater, thus capturing the essence of the ReLU function. In contrast, relu_derivative is defined to return 1 if x is positive and 0 otherwise, in line with the mathematical derivative of ReLU.

To visually represent these functions, we create a range of values using NumPy’s linspace method and apply our relu and relu_derivative functions to these values. We then plot these values using Matplotlib, resulting in a graph that effectively illustrates the behavior of both the ReLU function and its derivative.

The ReLU function graph shows a linear increase for positive values while remaining at zero for negative values. Its derivative, depicted as a dashed line, transitions from 0 to 1 at x=0.


Derivative of the ReLU Function in Python Using the NumPy Library

NumPy, a core library for numerical computations in Python, provides vectorized operations, making it an ideal choice for implementing ReLU and its derivative efficiently.

Using NumPy, the ReLU function and its derivative can be implemented as follows:

import numpy as np


def relu(x):
    return np.maximum(0, x)


def relu_derivative(x):
    return np.where(x > 0, 1, 0)

- np.maximum(0, x): Computes the element-wise maximum of array elements and zero.
- np.where(x > 0, 1, 0): Returns 1 for elements where x is greater than 0, otherwise 0.
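To see how these two calls behave on more than one dimension, here is a small sketch applied to a 2-D array. The batch values are hypothetical and chosen only to include negative, zero, and positive entries.

```python
import numpy as np


def relu(x):
    return np.maximum(0, x)


def relu_derivative(x):
    return np.where(x > 0, 1, 0)


# Hypothetical 2 x 3 batch of pre-activation values
batch = np.array([[-1.5, 0.0, 2.0],
                  [3.0, -0.2, 0.7]])

activations = relu(batch)
gradients = relu_derivative(batch)

print(activations.tolist())  # [[0.0, 0.0, 2.0], [3.0, 0.0, 0.7]]
print(gradients.tolist())    # [[0, 0, 1], [1, 0, 1]]

# np.where with integer constants yields an integer array;
# cast it if a float gradient is needed downstream
float_gradients = gradients.astype(batch.dtype)
```

Both results keep the shape of the input, so the derivative can be multiplied element-wise with an upstream gradient of the same shape.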

Here’s a complete code example that demonstrates the implementation and plots the ReLU function alongside its derivative.

import numpy as np
import matplotlib.pyplot as plt


def relu(x):
    return np.maximum(0, x)


def relu_derivative(x):
    return np.where(x > 0, 1, 0)


# Generating values
x_values = np.linspace(-2, 2, 400)
relu_values = relu(x_values)
relu_derivative_values = relu_derivative(x_values)

# Plotting
plt.plot(x_values, relu_values, label="ReLU Function")
plt.plot(x_values, relu_derivative_values, label="ReLU Derivative", linestyle="dashed")
plt.xlabel("x")
plt.ylabel("Function values")
plt.title("ReLU Function and Its Derivative")
plt.legend()
plt.show()

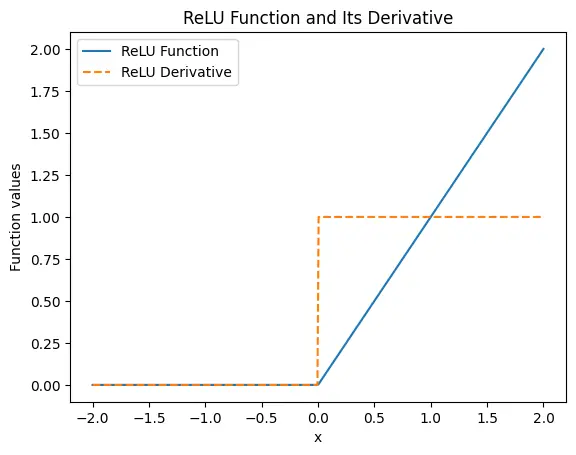

Our implementation leverages NumPy’s vectorized operations to efficiently handle array inputs. We define two functions: relu(x), using np.maximum(0, x) to compute the element-wise maximum of array elements and zero, and relu_derivative(x), using np.where(x > 0, 1, 0) to return 1 for elements where x is greater than 0, and 0 otherwise.

This efficient use of NumPy’s functions allows for fast computations over a range of values.

To visually demonstrate these concepts, we plot the ReLU function and its derivative using Matplotlib. The code generates an array of values using np.linspace(-2, 2, 400), applies the relu and relu_derivative functions to these values, and then plots them.

This results in a graph where the ReLU function is depicted as a continuous line, increasing linearly for positive x and remaining zero for negative x. Its derivative is shown as a dashed line, which is 0 for negative x and 1 for positive x.


Derivative of the ReLU Function in Python Using the Lambda Function

In Python, lambda functions offer a concise way to implement such functionality. Here, we focus on using lambda functions to define ReLU and its derivative, illustrating their use in neural networks, particularly in the backpropagation process for updating weights.

We define the ReLU function and its derivative using lambda functions as follows:

relu = lambda x: max(0, x)

relu_derivative = lambda x: 1 if x > 0 else 0

Below is a complete Python script that demonstrates the implementation and plots both the ReLU function and its derivative:

import numpy as np
import matplotlib.pyplot as plt

# ReLU and its derivative defined as lambda functions
relu = lambda x: max(0, x)
relu_derivative = lambda x: 1 if x > 0 else 0

# Generating values
x_values = np.linspace(-2, 2, 400)
relu_values = [relu(x) for x in x_values]
relu_derivative_values = [relu_derivative(x) for x in x_values]

# Plotting
plt.plot(x_values, relu_values, label="ReLU Function")
plt.plot(x_values, relu_derivative_values, label="ReLU Derivative", linestyle="dashed")
plt.xlabel("x")
plt.ylabel("Function values")
plt.title("ReLU Function and Its Derivative")
plt.legend()
plt.show()

In our code analysis, we explore the use of lambda functions in Python to implement the ReLU activation function and its derivative. We define the ReLU function as relu = lambda x: max(0, x) and its derivative as relu_derivative = lambda x: 1 if x > 0 else 0, both succinctly capturing the core logic in a readable and efficient manner.

We then proceed to evaluate the lambda functions over a range of values, utilizing NumPy’s linspace to generate an array of input values. For each input, we compute the corresponding ReLU and derivative values, which are then plotted using Matplotlib.

This visual representation is crucial for understanding how these functions behave across different inputs, a fundamental aspect in the context of neural networks, especially during the backpropagation phase for updating weights.
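To make the backpropagation role a little more concrete, here is a minimal sketch of one gradient-descent step for a single neuron with a ReLU activation and a squared-error loss. The neuron, the loss, and every number below are assumptions chosen for illustration; none of them come from the article.

```python
relu = lambda x: max(0, x)
relu_derivative = lambda x: 1 if x > 0 else 0

# Hypothetical values, picked so the arithmetic is easy to follow
x, y_true = 2.0, 1.0      # input and target
w, b = 0.5, 0.25          # weight and bias
learning_rate = 0.25

# Forward pass
z = w * x + b             # pre-activation
a = relu(z)               # activation
loss = (a - y_true) ** 2  # squared error

# Backward pass via the chain rule: dL/dw = dL/da * da/dz * dz/dw
dloss_da = 2 * (a - y_true)
da_dz = relu_derivative(z)       # 1 here because z > 0
dloss_dw = dloss_da * da_dz * x
dloss_db = dloss_da * da_dz

# Gradient-descent update
w_new = w - learning_rate * dloss_dw
b_new = b - learning_rate * dloss_db

print(z, a, loss)    # 1.25 1.25 0.0625
print(w_new, b_new)  # 0.25 0.125
```

If z were zero or negative, relu_derivative(z) would be 0, both gradients would vanish, and the weight and bias would not change in this step.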


Conclusion

This article shows how to implement the ReLU function in Python and focuses on how to implement its derivative. The ReLU function is used frequently in deep learning.

But it has some issues. For instance, if the input value is less than 0, both the output and the gradient are 0.

As a result, a neuron that only receives negative inputs stops updating its weights during training, a problem often called the "dying ReLU". A common remedy is the Leaky ReLU function, which keeps a small non-zero slope for negative inputs.
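For comparison, here is a minimal sketch of Leaky ReLU and its derivative in the same NumPy style used earlier. The function names and the slope alpha=0.01 are assumptions (0.01 is a commonly used default, not a value taken from this article), and the derivative at x = 0 is set to alpha by convention.

```python
import numpy as np


def leaky_relu(x, alpha=0.01):
    # Positive inputs pass through; negative inputs are scaled by alpha
    return np.where(x > 0, x, alpha * x)


def leaky_relu_derivative(x, alpha=0.01):
    # Slope is 1 for positive inputs and alpha otherwise (including x = 0)
    return np.where(x > 0, 1.0, alpha)


# Hypothetical sample inputs
x = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])

print(leaky_relu(x))
print(leaky_relu_derivative(x))
```

Because the derivative is alpha rather than 0 for negative inputs, a small gradient still flows and the weights can keep updating.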

Nimesha has been a full-stack software engineer for more than five years. He loves technology for its power to solve many of our problems within minutes. He has contributed to various projects over the last 5+ years and has worked with almost all three tiers (DB, M-Tier, and Client). Recently, he has started working with DevOps technologies such as Azure administration, Kubernetes, Terraform automation, and Bash scripting.