How to Fix NLTK Stemming Anomalies in Python

- Fix NLTK Stemming Anomalies in Python
- Fixes of the Stemming Anomalies in the PorterStemmer Module of NLTK in Python
- Use of Lemmatizers Over Stemmers in NLTK in Python
- Conclusion

Stemming, as the name suggests, is the process of reducing words to their root forms. For example, the words happiness, happily, and happier all share the root word happy.

In Python, we can do this with the stemmers provided by the NLTK library, but sometimes you might not get the results you expected. For example, the PorterStemmer of the NLTK library reduces fairly to fairli, not fair.

This article will discuss why such differences occur and how to fix them to get the root word we want. So grab a coffee and read on!

Fix NLTK Stemming Anomalies in Python

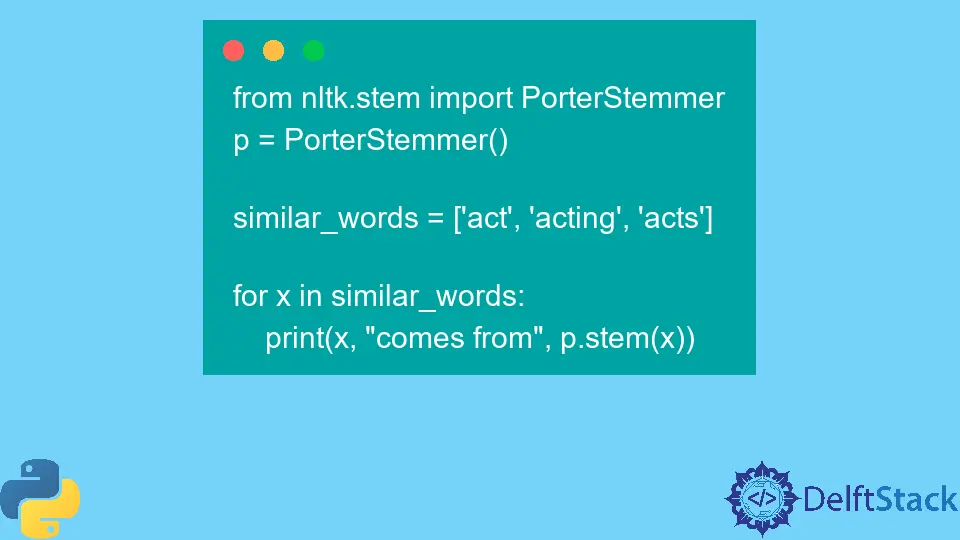

Although the NLTK library of Python has several stemmers, the most commonly used one is the PorterStemmer. Here is an example of how the PorterStemmer works.

Let us first understand what happens in the code below.

We import PorterStemmer from the nltk library and create an instance of it. We then call this instance's stem() method on each word in the given list.

```python
from nltk.stem import PorterStemmer

p = PorterStemmer()

similar_words = ["act", "acting", "acts"]

# Print each word next to the stem the PorterStemmer produces for it
for x in similar_words:
    print(x, "comes from", p.stem(x))
```

Output:

```text
act comes from act
acting comes from act
acts comes from act
```

All the words in this list are reduced to the root word act by the PorterStemmer. But the stemmer does not always behave this way.
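Because related forms end up with the same stem, a stemmer is handy for grouping word forms, for example before counting words or matching search terms. Below is a minimal sketch; group_by_stem is a hypothetical helper written for this illustration, not an NLTK function.

```python
from collections import defaultdict

from nltk.stem import PorterStemmer


def group_by_stem(words):
    """Group words under the stem PorterStemmer assigns to each of them.

    group_by_stem is a hypothetical helper for illustration, not an NLTK API.
    """
    stemmer = PorterStemmer()
    groups = defaultdict(list)
    for word in words:
        groups[stemmer.stem(word)].append(word)
    return dict(groups)


groups = group_by_stem(["act", "acting", "acts", "loudly"])
print(groups)
# {'act': ['act', 'acting', 'acts'], 'loudli': ['loudly']}
```

Notice that loudly lands under loudli, which is exactly the anomaly discussed next.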

Here is another example.

```python
from nltk.stem import PorterStemmer

p = PorterStemmer()

print(p.stem("loudly"))
```

Output:

```text
loudli
```

This time, the output is loudli, not loud.

You may wonder why this happens and, more importantly, how to fix it.

Fixes of the Stemming Anomalies in the PorterStemmer Module of NLTK in Python

The Porter stemming algorithm behind the PorterStemmer works in five phases, each with its own rules for removing or rewriting suffixes, and those rules were designed for English.

This means that even though loudli is not what we wanted, it is the correct stem according to the PorterStemmer's rules.
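To make "correct according to the rules" concrete, here is a toy re-implementation of just two pieces of the original Porter algorithm: step 1c, which turns a final y into i, and an excerpt of the step 2 rules for words ending in li. It is a simplified sketch, not NLTK's code: it treats only a, e, i, o, and u as vowels and skips the measure conditions the real algorithm checks.

```python
VOWELS = set("aeiou")  # simplification: Porter also treats y as a vowel in some positions

# Excerpt (not the full table) of the original Porter step 2 rules ending in "li"
STEP_2_LI_RULES = {
    "abli": "able",
    "alli": "al",
    "entli": "ent",
    "eli": "e",
    "ousli": "ous",
}


def step_1c(word: str) -> str:
    """Replace a final 'y' with 'i' when the rest of the word contains a vowel."""
    if word.endswith("y") and any(ch in VOWELS for ch in word[:-1]):
        return word[:-1] + "i"
    return word


def step_2_li(word: str) -> str:
    """Rewrite one of the listed -li suffixes; any other -li ending is left alone."""
    for suffix, replacement in STEP_2_LI_RULES.items():
        if word.endswith(suffix):
            return word[: -len(suffix)] + replacement
    return word


for word in ["loudly", "fairly", "famously"]:
    print(word, "->", step_2_li(step_1c(word)))
```

Since no rule in this table covers a plain li ending, loudli and fairli are simply what the rules produce, while famously reaches famous through the ousli rule.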

But there is some good news: not all stemmers in the NLTK library work the same way. Let us stem the word loudly with the SnowballStemmer this time.

Note that here, we have to pass a language as an argument to the SnowballStemmer() constructor.

```python
from nltk.stem import SnowballStemmer

# The SnowballStemmer supports several languages, so the language is required
s = SnowballStemmer("english")

print(s.stem("loudly"))
```

Output:

```text
loud
```

You can see that we get the desired output this time because the SnowballStemmer, an improved version of the Porter algorithm that is also known as Porter2, uses a different set of rules than the PorterStemmer.
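The rule that makes the difference sits in step 2 of the Snowball English (Porter2) algorithm: a final li is deleted when it lies in the region R1 and follows one of the letters c, d, e, g, h, k, m, n, r, or t. Below is a toy sketch of only that rule plus the y-to-i step before it. It ignores Porter2's other steps, longer competing suffixes, and special cases, so treat it as an illustration rather than a replacement for SnowballStemmer.

```python
VOWELS = set("aeiouy")  # Porter2 treats y as a vowel by default
VALID_LI_ENDINGS = set("cdeghkmnrt")


def r1_start(word: str) -> int:
    """Index where R1 begins: just after the first non-vowel that follows a vowel."""
    for i in range(1, len(word)):
        if word[i] not in VOWELS and word[i - 1] in VOWELS:
            return i + 1
    return len(word)


def step_1c(word: str) -> str:
    """Replace a final 'y' with 'i' if it follows a non-vowel that is not the first letter."""
    if len(word) > 2 and word.endswith("y") and word[-2] not in VOWELS:
        return word[:-1] + "i"
    return word


def delete_li(word: str) -> str:
    """Delete a final 'li' that lies in R1 and follows a valid li-ending letter."""
    if (
        word.endswith("li")
        and len(word) >= 3
        and word[-3] in VALID_LI_ENDINGS
        and len(word) - 2 >= r1_start(word)
    ):
        return word[:-2]
    return word


for word in ["loudly", "fairly", "happily"]:
    print(word, "->", delete_li(step_1c(word)))
```

In this sketch, happily keeps its li because i is not a valid li-ending, a reminder that rule-based stemmers all have blind spots.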

Try stemming the word actor with both of these stemmers, as done below.

```python
from nltk.stem import PorterStemmer, SnowballStemmer

p = PorterStemmer()
print(p.stem("actor"))

s = SnowballStemmer("english")
print(s.stem("actor"))
```

Output:

```text
actor
actor
```

Both stemmers return the same output, actor, not the root word act. Now let us try the same word with another stemmer, the LancasterStemmer.

```python
from nltk.stem import LancasterStemmer

l = LancasterStemmer()

print(l.stem("actor"))
```

Output:

```text
act
```

You can see that we get the desired output act this time.

As you can see, different stemmers can give different outputs for the same word.
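If you want to see which stemmer suits your vocabulary before committing to one, a small comparison loop helps. This is a sketch: compare_stemmers is a hypothetical helper, while PorterStemmer, SnowballStemmer, and LancasterStemmer are the NLTK classes used above.

```python
from nltk.stem import LancasterStemmer, PorterStemmer, SnowballStemmer


def compare_stemmers(words):
    """Return {word: {stemmer_name: stem}} for three of NLTK's stemmers.

    compare_stemmers is a hypothetical helper for illustration, not an NLTK API.
    """
    stemmers = {
        "porter": PorterStemmer(),
        "snowball": SnowballStemmer("english"),
        "lancaster": LancasterStemmer(),
    }
    return {
        word: {name: stemmer.stem(word) for name, stemmer in stemmers.items()}
        for word in words
    }


results = compare_stemmers(["loudly", "fairly", "actor", "acting"])
for word, stems in results.items():
    print(f"{word:<8}", stems)
```

Running it on a sample of your own data shows at a glance where the stemmers disagree.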

NLTK offers other stemmers too, but since every one of them follows a fixed set of rules, there is always a chance of not getting the desired output. These stemmers chop words strictly according to their rules.

Stemming also works mostly on suffixes; it cannot recover a root by removing prefixes or infixes. Nor do stemming algorithms check the meaning of a word or whether the resulting stem is a real word.

Look at this example.

Here is a string of random letters ending in ing; the stemmer simply removes this suffix and returns the rest.

```python
from nltk.stem import PorterStemmer

p = PorterStemmer()

print(p.stem("wkhfksafking"))
```

Output:

```text
wkhfksafk
```
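Nothing about this is specific to NLTK. The toy stripper below, a hypothetical four-suffix stemmer rather than any NLTK algorithm, shows the same behavior: it never checks whether the input or the result is a real word, and it only ever looks at the end of a word, so a prefix such as un survives.

```python
# Hypothetical, deliberately tiny suffix list; real stemmers use far larger rule sets
SUFFIXES = ("ing", "ly", "ed", "s")


def strip_suffix(word: str, min_stem: int = 3) -> str:
    """Remove the first matching suffix if at least min_stem letters remain.

    There is no dictionary lookup, so nonsense words are 'stemmed' too,
    and prefixes are never examined.
    """
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


for word in ["wkhfksafking", "unhappy", "loudly", "sing"]:
    print(word, "->", strip_suffix(word))
```

The min_stem guard keeps short words such as sing intact; without it, the stripper would happily reduce sing to s.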

Therefore, a better way to solve this problem is to use a lemmatizer. Let us look at how lemmatizers work in detail.

Use of Lemmatizers Over Stemmers in NLTK in Python

Unlike stemmers, lemmatizers analyze a word's morphology and look up the most appropriate lemma, which can depend on how the word is used (its part of speech). Note that a lemma is not the same as a stem: a lemma is an actual dictionary word, the base form shared by all of its inflected forms, whereas a stem need not be a real word.
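The key difference is a dictionary check. WordNet's lemmatizer, for example, tries suffix-removal rules for the given part of speech (plus a list of irregular forms) and keeps only candidates that exist in WordNet. The sketch below imitates that idea with a hypothetical mini-lexicon and a handful of rules; it is not NLTK's implementation.

```python
# Hypothetical mini-lexicon of known base forms, keyed by part of speech
LEXICON = {
    "n": {"study", "act", "actor"},
    "v": {"study", "act"},
}

# A few suffix-detachment rules per part of speech: (suffix, replacement)
RULES = {
    "n": [("ies", "y"), ("s", "")],
    "v": [("ies", "y"), ("ing", ""), ("s", "")],
}


def toy_lemmatize(word: str, pos: str = "n") -> str:
    """Return a dictionary form of word for the given part of speech.

    Candidates produced by the rules are accepted only if they are in
    LEXICON; otherwise the word is returned unchanged.
    """
    if word in LEXICON[pos]:
        return word
    for suffix, replacement in RULES[pos]:
        if word.endswith(suffix):
            candidate = word[: -len(suffix)] + replacement
            if candidate in LEXICON[pos]:
                return candidate
    return word


print(toy_lemmatize("studies"))            # study
print(toy_lemmatize("studying"))           # studying (no noun rule applies)
print(toy_lemmatize("studying", pos="v"))  # study
print(toy_lemmatize("wkhfksafking", "v"))  # wkhfksafking (not a known word)
```

Because every answer must pass the lexicon check, the random string from the stemmer example comes back untouched instead of being chopped.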

Let us look at an example to see how lemmatizers are better than stemmers.

Here, we are using the PorterStemmer to stem several words.

```python
from nltk.stem import PorterStemmer

p = PorterStemmer()

w = ["studies", "studying", "study"]

for i in w:
    print(p.stem(i))
```

Output:

```text
studi
studi
studi
```

The output, studi, is not a real word, so it is not very helpful. Let us go ahead and use a lemmatizer on the same set of words.

We first import the WordNetLemmatizer class and create an instance of it. Then, we call its lemmatize() method in a for loop to find the lemma of each word.

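```python
import nltk
from nltk.stem import WordNetLemmatizer

# WordNetLemmatizer needs the WordNet data; download it once if it is not installed yet
nltk.download("wordnet", quiet=True)

l = WordNetLemmatizer()

w = ["studies", "studying", "study"]

for i in w:
    print(l.lemmatize(i))
```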

Output:

```text
study
studying
study
```

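We do not get absurd outputs this time, and every output is a real word. Note that studying stays unchanged because lemmatize() treats every word as a noun by default; calling l.lemmatize("studying", pos="v") returns study instead.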

This is why a lemmatizer is often a better choice than a stemmer. For more information about comparing strings in Python and the differences between stemming and lemmatization, refer to How to Compare Strings in Python.

Conclusion

In this article, we learned about stemmers and lemmatizers in NLTK in Python. We saw how and why stemmers sometimes give absurd results and how lemmatizers can give better ones.