How to Parse HTML Data in Python

-

Use the

BeautifulSoupModule to Parse HTML Data in Python -

Use the

PyQueryModule to Parse HTML Data in Python - Use the lxml Library to Parse HTML Data in Python

- Use the justext Library to Parse HTML Data in Python

- Use the EHP Module to Parse HTML Data in Python

- Conclusion

Through the emergence of web browsers, data all over the web is extensively available to absorb and use for various purposes. However, this HTML data is difficult to be injected programmatically in a raw manner.

We need to have some medium to parse the HTML script to be available programmatically. This article will provide the various ways we can parse HTML data quickly through Python methods/libraries.

Use the BeautifulSoup Module to Parse HTML Data in Python

Python offers the BeautifulSoup module to parse and pull essential data from the HTML and XML files.

This saves hours for every programmer by helping them navigate through the file structure to parse and fetch the data in a readable format from the HTML or marked-up structure.

The BeautifulSoup module accepts the HTML data/file or a web page URL as an input and returns the requested data using customized functions available within the module.

Let us look at some of the functions served by BeautifulSoup through the below example. We will be parsing the below HTML file (example.html) to extract some data.

<html>

<head>

<title>Heading 1111</title>

</head>

<body>

<p class="title"><b>Body</b></p>

<p class="Information">Introduction

<a href="http://demo.com" id="sync01">Amazing info!!</a>

<p> Stay tuned!!</p>

</body>

</html>

To use the functions available in the BeautifulSoup module, we need to install it using the below command.

pip install beautifulsoup4

Once done, we then pass the HTML file (example.html) to the module, as shown below.

from bs4 import BeautifulSoup

with open("example.html") as obj:

data = BeautifulSoup(obj, "html.parser")

The BeautifulSoup() function creates an object/pointer that points to the HTML file through the HTML.parser navigator. We can now use the pointer data (as seen in the above code) to traverse the website or the HTML file.

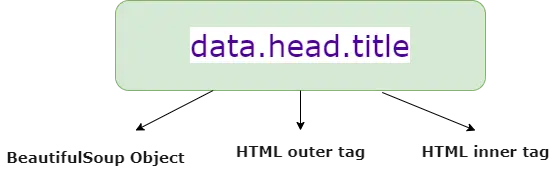

Let us understand the HTML tag component breakup through the below diagram.

We use object.html_outer_tag.html_inner_tag to extract the data within a specific HTML tag from the entire script or web page. With the BeautifulSoup module, we can even fetch data against individual HTML tags such as title, div, p, etc.

Let us try to extract the data against different HTML tags shown below in a complete code format.

from bs4 import BeautifulSoup

with open("example.html") as obj:

data = BeautifulSoup(obj, "html.parser")

print(data.head.title)

print(data.body.a.text)

We tried to extract the data enclosed within the <title> tag wrapped around the <head> as the outer tag with the above code. Thus, we point the BeautifulSoup object to that tag.

We can also extract the text associated with the <a> tag by pointing the BeautifulSoup object to its text section.

Output:

Heading 1111

Amazing info!!

Let us consider the below example to understand the parsing of HTML tags such as <div> through the BeautifulSoup object.

Consider the below HTML code.

<html>

<head>Heading 0000</head>

<body>

<div>Data</div>

</body>

</html>

If we wish to display or extract the information of the tag <div>, we need to formulate the code to help the BeautifulSoup object point to that specific tag for data parsing.

from bs4 import BeautifulSoup

with open("example.html") as obj:

data = BeautifulSoup(obj, "html.parser")

print(data.body.div)

Output:

<div>Data</div>

Thus, we can scrape web pages directly using this module. It interacts with the data over the web/HTML/XML page and fetches the essential customized data based on the tags.

Use the PyQuery Module to Parse HTML Data in Python

Python PyQuery module is a jQuery library that enables us to trigger jQuery functions against XML or HTML documents to easily parse through the XML or HTML scripts to extract meaningful data.

To use PyQuery, we need to install it using the below command.

pip install pyquery

The pyquery module offers us a PyQuery function that enables us to set a pointer to the HTML code for data extraction. It accepts the HTML snippet/file as an input and returns the pointer object to that file.

This object can further be used to point to the exact HTML tag whose content/text is to be parsed. Consider the below HTML snippet (demo.html).

<html>

<head>Heading 0000</head>

<body>

<div>Data</div>

</body>

</html>

We then import the PyQuery function from within the pyquery module. With the PyQuery function, we point an object to the demo.html file in a readable format.

Then, the object('html_tag').text() enables us to extract the text associated with any HTML tag.

from pyquery import PyQuery

data_html = open("demo.html", "r").read()

obj = PyQuery(data_html)

print(obj("head").text())

print(obj("div").text())

The obj('head') function points to the <head> tag of the HTML script, and the text() function enables us to retrieve the data bound to that tag.

Similarly, with obj('div').text(), we extract the text data bound to the <div> tag.

Output:

Heading 0000

Data

Use the lxml Library to Parse HTML Data in Python

Python offers us an lxml.html module to efficiently parse and deal with HTML data. The BeautifulSoup module also performs HTML parsing, but it turns out to be less effective when it comes to handling complex HTML scripts while scraping the web pages.

With the lxml.html module, we can parse the HTML data and extract the data values against a particular HTML tag using the parse() function. This function accepts the web URL or the HTML file as an input and associates a pointer to the root element of the HTML script with the getroot() function.

We can then use the same pointer with the cssselect(html_tag) function to display the content bound by the passed HTML tag. We will be parsing the below HTML script through the lxml.html module.

<html>

<head>Heading 0000</head>

<body>

<a>Information 00</a>

<div>Data</div>

<a>Information 01</a>

</body>

</html>

Let us have a look at the Python snippet below.

from lxml.html import parse

info = parse("example.html").getroot()

for x in info.cssselect("div"):

print(x.text_content())

for x in info.cssselect("a"):

print(x.text_content())

Here, we have associated object info with the HTML script (example.html) through the parse() function. Furthermore, we use cssselect() function to display the content bound with the <div> and <a> tags of the HTML script.

It displays all the data enclosed by the <a> and div tags.

Output:

Data

Information 00

Information 01

Use the justext Library to Parse HTML Data in Python

Python justext module lets us extract a more simplified form of text from within the HTML scripts. It helps us eliminate unnecessary content from the HTML scripts, headers, footers, navigation links, etc.

With the justext module, we can easily extract full-fledged text/sentences suitable for generating linguistic data sources. The justext() function accepts the web URL as an input, targets the content of the HTML script, and extracts the English statements/paragraphs/text out of it.

Consider the below example.

We have used the requests.get() function to do a GET call to the web URL passed to it. Once we point a pointer to the web page, we use the justext() function to parse the HTML data.

The justext() function accepts the web page pointer variable as an argument and parks it with the content function to fetch the web page content.

Moreover, it uses the get_stoplist() function to look for sentences of a particular language for parsing (English, in the below example).

import requests

import justext

link = requests.get("http://www.google.com")

data = justext.justext(link.content, justext.get_stoplist("English"))

for x in data:

print(x.text)

Output:

Search Images Maps Play YouTube News Gmail Drive More »

Web History | Settings | Sign in

Advanced search

Google offered in: हिन्दीবাংলাతెలుగుमराठीதமிழ்ગુજરાતીಕನ್ನಡമലയാളംਪੰਜਾਬੀ

Advertising Programs Business Solutions About Google Google.co.in

© 2022 - Privacy - Terms

Use the EHP Module to Parse HTML Data in Python

Having explored the different Python modules for parsing HTML data, fancy modules like BeautifulSoup and PyQuery do not function efficiently with huge or complex HTML scripts. To handle broken or complex HTML scripts, we can use the Python EHP module.

The learning curve of this module is pretty simple and is easy to adapt. The EHP module offers us the Html() function, which generates a pointer object and accepts the HTML script as an input.

To make this happen, we use the feed() function to feed the HTML data to the Html() function for identification and processing. Finally, the find() method enables us to parse and extract data associated with a specific tag passed to it as a parameter.

Have a look at the below example.

from ehp import *

script = """<html>

<head>Heading</head>

<body>

<div>

Hello!!!!

</div>

</body>

</html>

"""

obj = Html()

x = obj.feed(script)

for i in x.find("div"):

print(i.text())

Here, we have the HTML script in the script variable. We have fed the HTML script to the Html() method using the feed() function internally through object parsing.

We then tried to parse the HTML data and get the data against the <div> tag using the find() method.

Output:

Hello!!!!

Conclusion

This tutorial discussed the different approaches to parse HTML data using various Python in-built modules/libraries. We also saw the practical implementation of real-life examples to understand the process of HTML data parsing in Python.