How to Check if TensorFlow Is Using GPU

In machine learning, training models often requires heavy computation, and a CPU alone cannot deliver the throughput a GPU can.

When a system does not have a GPU, hosted platforms such as Google Colab and Kaggle offer GPU runtimes instead.

By default, TensorFlow runs on the CPU, where computation can be roughly 20x slower than on a GPU, depending on the workload. Moving the work to a GPU therefore saves a great deal of time.

Whatever computer system you use, the standard TensorFlow GPU setup requires a CUDA-enabled GPU. In other words, the GPU must be present in your system before TensorFlow can be connected to it.

There are several ways to set this up. A widely recommended one is the Docker container method, which requires installing the CUDA Toolkit, cuDNN, GPU drivers, and so on. Nvidia is the main supplier of GPUs specialized for machine learning, so most tutorials are Nvidia-oriented.

However, we will focus on a newer option that works with most common GPU vendors, such as AMD, Nvidia, Intel, and Qualcomm. It is a low-level machine learning API named DirectML.

The API provides hardware-accelerated machine learning primitive operators. In the following sections, we will examine how to install it in a local Anaconda environment and how it connects TensorFlow to the GPU.

Use DirectML to Enable TensorFlow to Use GPU

The task is simple and needs only a few commands. Note, however, that DirectML works only with specific versions of TensorFlow and Python.

The recommended combination is TensorFlow 1.15.5 with Python 3.6 or 3.7.

First, open the Anaconda Prompt, or launch the CMD.exe Prompt application from Anaconda Navigator, to enter the commands. Next, we will create an environment for the task and install the necessary packages.

Let’s check the following commands.

conda create -n tfdml python=3.6

conda activate tfdml

pip install tensorflow-directml

While the tfdml environment is being created, conda asks for confirmation to proceed; enter y there. Then activate the environment and install DirectML.
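Because only certain Python versions work with DirectML, a quick sanity check inside the activated tfdml environment can catch a mismatch early. The sketch below is only an illustration, not part of the official setup: the helper names `python_is_supported` and `package_is_importable` are hypothetical, and the accepted version range simply mirrors the 3.6-3.7 recommendation above.

```python
import importlib.util
import sys

# Assumption: the supported versions are the ones this article recommends.
SUPPORTED_VERSIONS = {(3, 6), (3, 7)}


def python_is_supported(version_info=None):
    """Return True if the major.minor version is in the recommended set."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) in SUPPORTED_VERSIONS


def package_is_importable(name):
    """Return True if a top-level package can be located, without importing it."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    print("Python version OK:", python_is_supported())
    # tensorflow-directml is imported under the name `tensorflow`.
    print("tensorflow importable:", package_is_importable("tensorflow"))
```

If either line prints False, the environment was probably created with a different Python version or the pip install did not complete.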

Later, we will deactivate the environment and follow the commands below in the base environment.

conda deactivate

conda install -c conda-forge jupyterlab

conda install -c conda-forge nb_conda_kernels

Soon after installing these, we will again activate the tfdml environment and write the commands as follows.

conda activate tfdml

conda install ipykernel

ipython

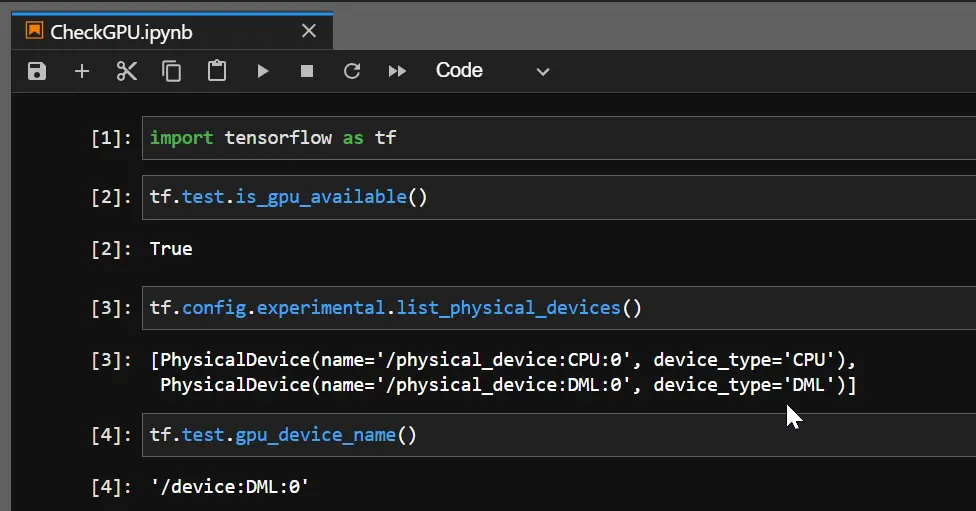

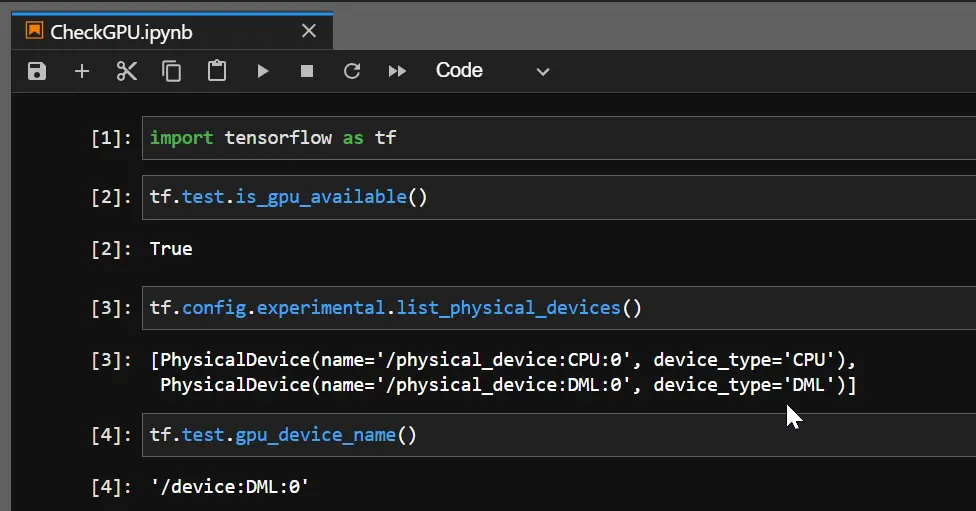

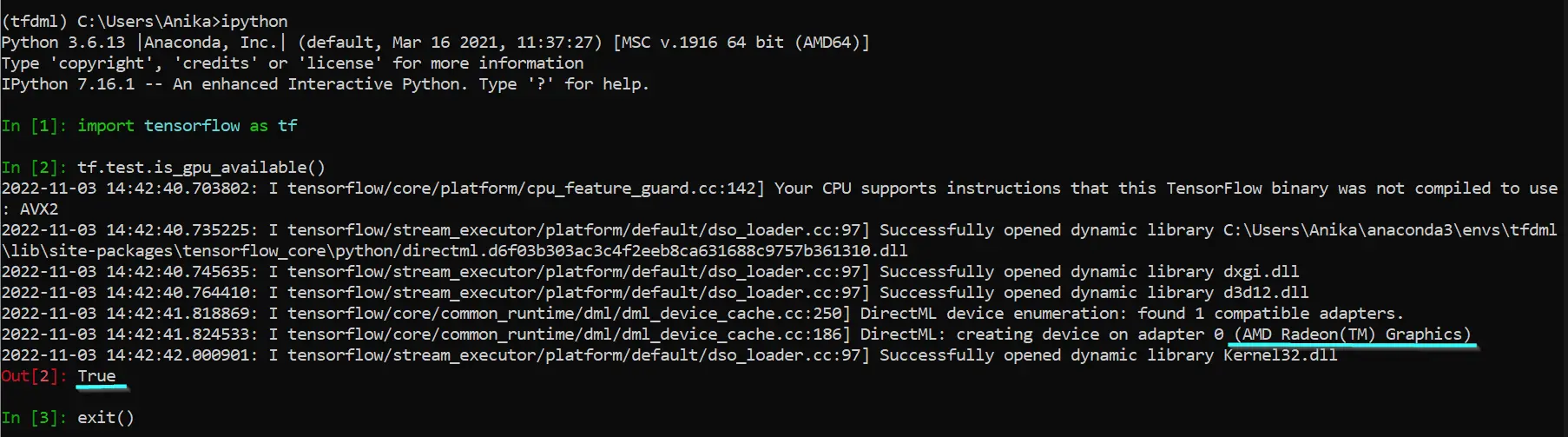

So, when we execute ipython, we enter the Python shell, where we can check whether a DirectML device has been created on top of the GPU. If the call below returns True, TensorFlow is using the GPU.

import tensorflow as tf

tf.test.is_gpu_available()

Output:

As the output shows, a DirectML device has been created on the AMD Radeon(TM) GPU, so TensorFlow can use it. When you check the GPU device name, it is reported as DML.
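For more detail than a single True or False, TensorFlow can also list the devices it sees. The following is a minimal sketch, assuming the DirectML build reports its device type as DML, as described above; the helper names `accelerator_names` and `local_accelerators` are hypothetical. It relies on `device_lib.list_local_devices()` from `tensorflow.python.client` and returns None when TensorFlow is not installed.

```python
# Assumption: DirectML devices report the type "DML"; CUDA builds report "GPU".
ACCELERATOR_TYPES = ("GPU", "DML")


def accelerator_names(devices):
    """Return the names of devices whose type marks them as accelerators."""
    return [d.name for d in devices if d.device_type in ACCELERATOR_TYPES]


def local_accelerators():
    """List accelerator device names, or None if TensorFlow is not installed."""
    try:
        from tensorflow.python.client import device_lib
    except ImportError:
        return None
    return accelerator_names(device_lib.list_local_devices())


if __name__ == "__main__":
    found = local_accelerators()
    if found is None:
        print("TensorFlow is not installed in this environment.")
    elif found:
        print("Accelerator devices:", found)
    else:
        print("Only CPU devices are visible to TensorFlow.")
```

Run inside the tfdml environment, an empty result would suggest that TensorFlow has fallen back to the CPU.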