MapReduce Using Apache Spark

In this article, we will learn how to run a MapReduce job with Apache Spark using the Scala programming language.

An Overview of MapReduce

MapReduce is the programming paradigm used by Hadoop, designed to process very large amounts of data in parallel. The overall job is divided into smaller chunks of work, known as tasks.

Because MapReduce follows a master-slave architecture, the master node assigns the tasks and the slave nodes (a cluster of servers) carry them out. Each slave processes its own task and produces an individual output.
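The idea of dividing a job into tasks and combining the individual outputs can be sketched on a single machine. The following is only a local analogy, not how Hadoop schedules work: the names TaskSplitSketch, processChunk, and run are hypothetical, and Scala Futures stand in for the nodes that would each process one chunk.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object TaskSplitSketch {
  // Hypothetical stand-in for the work one node performs on its chunk.
  def processChunk(chunk: Seq[Int]): Int = chunk.sum

  // Plays the master's role: split the input into chunks, start one task
  // per chunk, then combine the individual outputs into a single result.
  def run(data: Seq[Int], chunkSize: Int): Int = {
    val chunks: List[Seq[Int]] = data.grouped(chunkSize).toList
    val tasks: List[Future[Int]] = chunks.map(chunk => Future(processChunk(chunk)))
    val partialResults: List[Int] = Await.result(Future.sequence(tasks), 10.seconds)
    partialResults.sum
  }

  def main(args: Array[String]): Unit = {
    // Four chunks of 25 numbers each are summed independently, then combined.
    println(run(1 to 100, chunkSize = 25)) // prints 5050
  }
}
```

In a real cluster the chunks live on different machines and the framework handles scheduling and failures; the sketch only shows the split, process, and combine shape of the computation.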

MapReduce has two components: a mapper and a reducer. In the first phase, the data is fed into the mapper, which converts the incoming data into key-value pairs.

The output generated by the mapper is often referred to as intermediate output. It is passed as input to the reducer, which performs an aggregation-style computation over the values that share the same key and emits the final key-value pairs, which form the output of the job.
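The two phases described above can be illustrated with plain Scala collections, without Hadoop or Spark. This is a minimal sketch under stated assumptions: MapReduceSketch, mapper, reducer, and run are hypothetical names chosen for illustration, and the grouping step is done with an in-memory groupBy rather than a distributed shuffle.

```scala
object MapReduceSketch {
  // Mapper: turn one input record (a line) into intermediate (key, value) pairs.
  def mapper(line: String): Seq[(String, Int)] =
    line.split(" ").filter(_.nonEmpty).map(word => (word, 1)).toSeq

  // Reducer: aggregate all values that share the same key.
  def reducer(key: String, values: Seq[Int]): (String, Int) =
    (key, values.sum)

  def run(lines: Seq[String]): Map[String, Int] = {
    // Map phase: the intermediate output of the mapper.
    val intermediate: Seq[(String, Int)] = lines.flatMap(mapper)
    // Group the intermediate pairs by key so each reducer call sees one key.
    val grouped: Map[String, Seq[Int]] =
      intermediate.groupBy(_._1).map { case (k, pairs) => (k, pairs.map(_._2)) }
    // Reduce phase: one final (key, value) pair per key.
    grouped.map { case (k, vs) => reducer(k, vs) }
  }

  def main(args: Array[String]): Unit = {
    val result = run(Seq("to be or", "not to be"))
    // prints (be,2), (not,1), (or,1), (to,2)
    println(result.toSeq.sortBy(_._1).mkString(", "))
  }
}
```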

Run MapReduce With Apache Spark

Though MapReduce is an important part of the Hadoop system, the same kind of job can also be run with Spark. To see how, we'll use the well-known word count program as an example.

The problem statement is: given a text file, count the frequency of each word in it.

Prerequisites:

The following should be installed on the system to run the program below.

- JDK 8

- Scala

- Hadoop

- Spark

We have to put the file into HDFS and start the Spark shell.

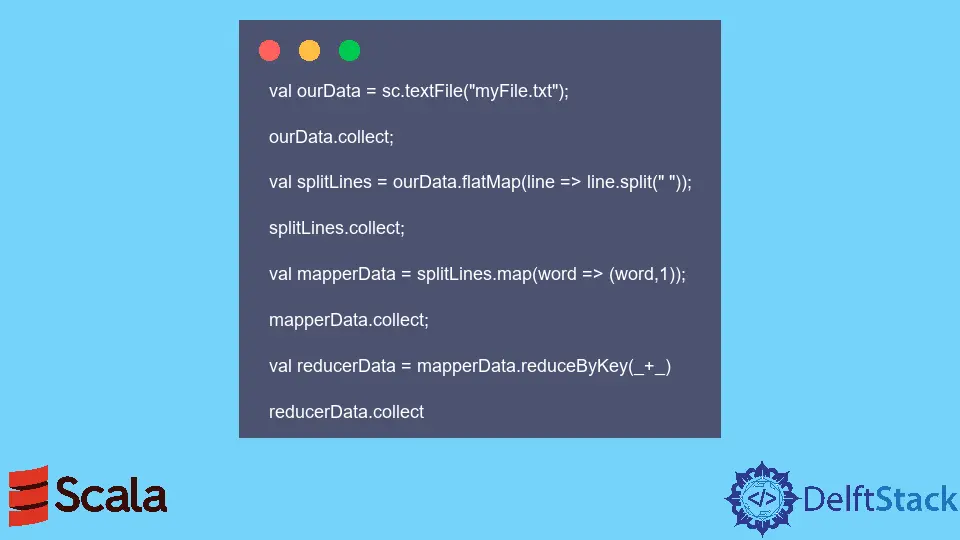

Example code: The code below was executed in the Spark shell, where sc is the SparkContext that the shell provides.

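// Load the file as an RDD with one element per line.
val ourData = sc.textFile("myFile.txt")

ourData.collect()

// Split every line into words and flatten the result into a single RDD of words.
val splitLines = ourData.flatMap(line => line.split(" "))

splitLines.collect()

// Map phase: pair each word with a count of 1.
val mapperData = splitLines.map(word => (word, 1))

mapperData.collect()

// Reduce phase: sum the counts of all pairs that share the same word.
val reducerData = mapperData.reduceByKey(_ + _)

reducerData.collect()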

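When the above code is executed, the final collect returns the frequency of each word in myFile.txt as an array of (word, count) pairs. Note that collect brings the entire result back to the driver, so it is suitable only for small inputs.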
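Splitting on a single space means that "Spark" and "spark," are counted as different words, and consecutive spaces produce empty strings. One possible refinement is sketched below as a small tokenizer; Tokenizer and tokenize are hypothetical names, not part of Spark, and the sketch assumes mostly ASCII text because \W in Java regular expressions treats non-ASCII letters as separators by default.

```scala
object Tokenizer {
  // Hypothetical helper: lower-case the line and split on runs of
  // non-word characters, dropping any empty strings that result.
  def tokenize(line: String): Seq[String] =
    line.toLowerCase.split("\\W+").filter(_.nonEmpty).toSeq

  def main(args: Array[String]): Unit = {
    // prints spark | is | fast | and | spark | is | fun
    println(tokenize("Spark is fast,  and Spark is fun!").mkString(" | "))
  }
}
```

In the Spark shell, a function like this could be passed to flatMap in place of line.split(" "), leaving the map and reduceByKey steps unchanged.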