OpenCV Optical Flow

- Use Optical Flow to Detect Moving Objects in Videos in OpenCV

- Track the Motion of an Object in a Video in OpenCV

This tutorial will discuss detecting moving objects in videos using optical flow in OpenCV.

Use Optical Flow to Detect Moving Objects in Videos in OpenCV

In OpenCV, we can use optical flow to detect moving objects in a video and to trace the path an object follows.

Optical flow estimates how points in an image move between two frames. If the position of an object changes from one frame to the next, we can mark it as a moving object and highlight it using OpenCV. For example, suppose we have a video in which we want to highlight a moving object.
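
The brightness-constancy idea behind optical flow fits in a few lines of NumPy. This is a minimal sketch, not how OpenCV implements it: it assumes the whole image moves by one small shift and solves the constraint Ix*u + Iy*v + It = 0 in the least-squares sense (a single-window Lucas-Kanade step). The helper estimate_global_flow() and the synthetic blob frames are hypothetical.

```python
import numpy as np

def estimate_global_flow(prev, nxt):
    """Least-squares (u, v) for the whole image from the brightness-constancy
    constraint Ix*u + Iy*v + It = 0 (one Lucas-Kanade window = whole image)."""
    prev = prev.astype(np.float64)
    nxt = nxt.astype(np.float64)
    # np.gradient returns the derivative along axis 0 (y) first, then axis 1 (x)
    iy, ix = np.gradient(prev)
    it = nxt - prev  # temporal derivative
    a = np.stack([ix.ravel(), iy.ravel()], axis=1)
    (u, v), *_ = np.linalg.lstsq(a, -it.ravel(), rcond=None)
    return u, v

# Hypothetical frames: a smooth bright blob that moves 0.5 px to the right
yy, xx = np.mgrid[0:64, 0:64]

def blob(cx, cy, sigma=5.0):
    return 255 * np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma ** 2))

frame_a = blob(32.0, 32.0)
frame_b = blob(32.5, 32.0)
u, v = estimate_global_flow(frame_a, frame_b)
print(f"u={u:.2f}, v={v:.2f}")  # u close to 0.5, v close to 0
```

Real optical flow methods solve this kind of equation locally (per window or per pixel) instead of once for the whole image, which is what lets them separate a moving object from a still background.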

First, we need two frames from the video: the previous frame and the next frame. We will use OpenCV's calcOpticalFlowFarneback() function to find the objects that move between them.

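The calcOpticalFlowFarneback() function computes dense optical flow using Gunnar Farneback's algorithm: it compares the two frames and estimates, for every pixel, how far that pixel moved. The result is an array with the same height and width as the input frames and two channels that hold each pixel's horizontal and vertical displacement.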

We can pass the two channels of this flow array to the cartToPolar() function to convert each displacement vector into a magnitude (how far the pixel moved) and an angle (the direction in which it moved).
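
For each element, cartToPolar() computes the magnitude sqrt(x² + y²) and the angle atan2(y, x) in radians, in the range 0 to 2π (passing angleInDegrees=True returns degrees instead). The NumPy sketch below reproduces that computation so the numbers are easy to inspect; flow_to_polar() is a hypothetical helper, not an OpenCV function.

```python
import numpy as np

def flow_to_polar(flow):
    """NumPy equivalent of cv2.cartToPolar(flow[..., 0], flow[..., 1]):
    magnitude = sqrt(dx^2 + dy^2), angle in radians in [0, 2*pi)."""
    dx, dy = flow[..., 0], flow[..., 1]
    magnitude = np.hypot(dx, dy)
    angle = np.mod(np.arctan2(dy, dx), 2 * np.pi)
    return magnitude, angle

# Four flow vectors: right, down, left, up (the image y axis points down)
flow = np.array([[[3.0, 0.0], [0.0, 3.0], [-3.0, 0.0], [0.0, -3.0]]], dtype=np.float32)
magnitude, angle = flow_to_polar(flow)
print(magnitude)            # all four magnitudes are 3
print(np.degrees(angle))    # approximately 0, 90, 180, 270
```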

After that, we can color each pixel according to its motion: the angle sets the hue and the magnitude sets the brightness, so moving objects stand out against a black background. For example, let’s use a video of a dog and highlight its motion.

See the code below.

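```python
import numpy as np
import cv2

cap_video = cv2.VideoCapture("bon_fire_dog_2.mp4")

# Read the first frame and convert it to grayscale
ret, frame1 = cap_video.read()
if not ret:
    raise SystemExit("Could not read the video!")
prvs_frame = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)

# HSV image used to visualize the flow; saturation is fixed at maximum
hsv_drawing = np.zeros_like(frame1)
hsv_drawing[..., 1] = 255

while True:
    ret, frame2 = cap_video.read()
    if not ret:
        print("No frames available!")
        break
    next_frame = cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY)

    # Dense optical flow: one (dx, dy) vector per pixel
    flow_object = cv2.calcOpticalFlowFarneback(
        prvs_frame, next_frame, None, 0.5, 3, 15, 3, 5, 1.2, 0
    )

    # Convert the flow vectors to magnitude and angle (in radians)
    magnitude, angle = cv2.cartToPolar(flow_object[..., 0], flow_object[..., 1])

    # Hue encodes direction (OpenCV's 8-bit hue range is 0-179), value encodes speed
    hsv_drawing[..., 0] = angle * 180 / np.pi / 2
    hsv_drawing[..., 2] = cv2.normalize(magnitude, None, 0, 255, cv2.NORM_MINMAX)

    bgr_drawing = cv2.cvtColor(hsv_drawing, cv2.COLOR_HSV2BGR)
    cv2.imshow("frame2", bgr_drawing)

    # Stop early if the Esc key is pressed
    if cv2.waitKey(10) & 0xFF == 27:
        break

    prvs_frame = next_frame

cap_video.release()
cv2.destroyAllWindows()
```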

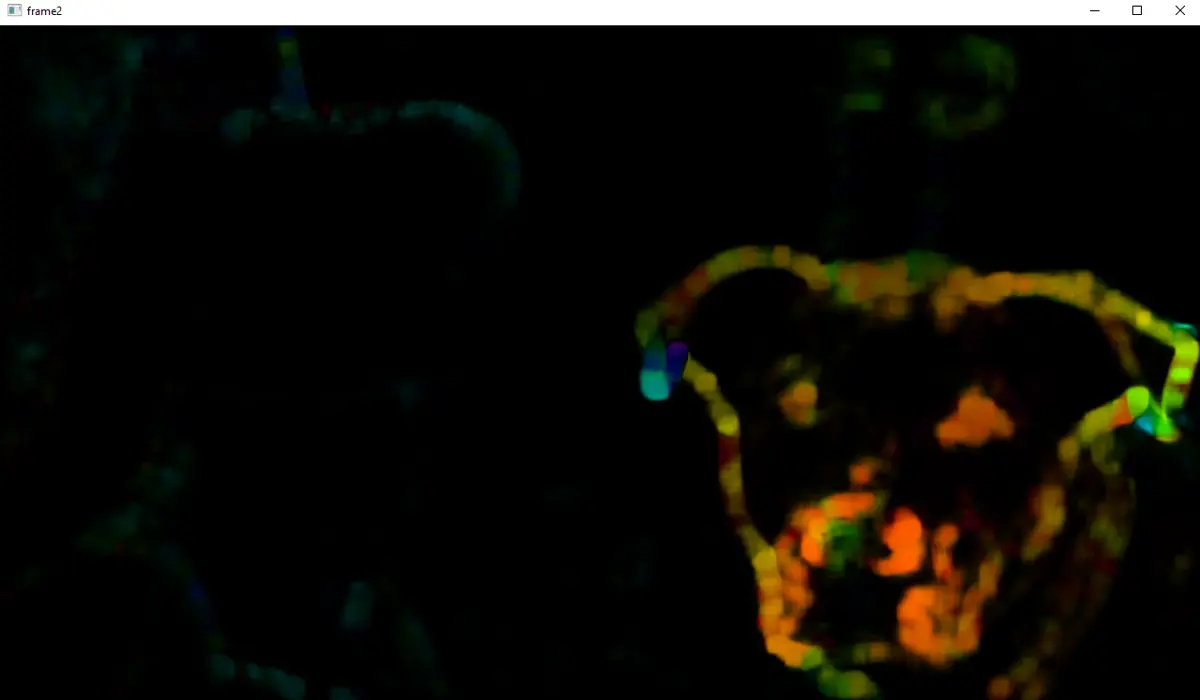

Output:

As you can see in the output above, the dog is highlighted in color because it is the only object moving in the video. In the code, the cvtColor() function converts the color frames of the video to grayscale, because calcOpticalFlowFarneback() works on single-channel images.

The zeros_like() function creates a black image with the same size as the first frame, which we use as an HSV drawing with its saturation channel set to 255. The calcOpticalFlowFarneback() function computes the flow between the previous and next frames.

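The first argument of the calcOpticalFlowFarneback() function, prev, is the first 8-bit single-channel image (the previous frame). The second argument, next, is the second image (the next frame), which must have the same size and type.

The third argument, flow, is the output array for the computed flow; it has the same size as prev and two 32-bit float channels, and passing None lets OpenCV allocate it. The fourth argument, pyr_scale, is the image scale (less than 1) used to build the image pyramids; 0.5 means each layer is half the size of the previous one.

The fifth argument, levels, is the number of pyramid layers including the original image; if we don’t want extra layers, we can set it to 1. The sixth argument, winsize, is the averaging window size.

Larger windows make the algorithm more robust to noise and better at detecting fast motion, but they produce a more blurred motion field; smaller windows give a sharper but noisier result. The seventh argument, iterations, is the number of iterations the algorithm performs at each pyramid level.

The eighth argument, poly_n, is the size of the pixel neighborhood used to find the polynomial expansion at each pixel (typically 5 or 7). The ninth argument, poly_sigma, is the standard deviation of the Gaussian used to smooth the derivatives for the polynomial expansion (about 1.1 for poly_n=5 and 1.5 for poly_n=7), and the tenth argument, flags, accepts operation flags such as cv2.OPTFLOW_USE_INITIAL_FLOW and cv2.OPTFLOW_FARNEBACK_GAUSSIAN (0 means no flags).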

The normalize() function with cv2.NORM_MINMAX rescales the flow magnitudes to the range 0 to 255, so the fastest-moving pixels appear brightest in the value channel of the drawing.

Track the Motion of an Object in a Video in OpenCV

We can also track individual feature points as they move through the video.

For example, to track the position of the dog, we first need some feature points to follow. We can get them with OpenCV's goodFeaturesToTrack() function, which detects strong corners using the Shi-Tomasi method.

After that, we pass these feature points, together with the previous and next frames, to the calcOpticalFlowPyrLK() function, which tracks the points from one frame to the next using the pyramidal Lucas-Kanade method. The function returns the new positions of the points, a status array, and an error array.

We can use these outputs to draw each point's trail with the line() function and its current position with the circle() function. After that, we combine the drawing with the original frame using OpenCV's add() function.

See the code below.

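```python
import numpy as np
import cv2

cap_video = cv2.VideoCapture("bon_fire_dog_2.mp4")

# Parameters for Shi-Tomasi corner detection
feature_parameters = dict(maxCorners=100, qualityLevel=0.3, minDistance=7, blockSize=7)

# Parameters for pyramidal Lucas-Kanade optical flow
lk_parameters = dict(
    winSize=(15, 15),
    maxLevel=2,
    criteria=(cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03),
)

# One random color per tracked point
random_color = np.random.randint(0, 255, (100, 3))

# Detect the initial feature points in the first frame
ret, previous_frame = cap_video.read()
if not ret:
    raise SystemExit("Could not read the video!")
previous_gray = cv2.cvtColor(previous_frame, cv2.COLOR_BGR2GRAY)
p0_point = cv2.goodFeaturesToTrack(previous_gray, mask=None, **feature_parameters)
if p0_point is None:
    raise SystemExit("No feature points found!")

# Image on which the motion trails are drawn
mask_drawing = np.zeros_like(previous_frame)

while True:
    ret, frame = cap_video.read()
    if not ret:
        print("No frames available!")
        break
    frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Track the points from the previous frame into the current one
    p1, st_d, error = cv2.calcOpticalFlowPyrLK(
        previous_gray, frame_gray, p0_point, None, **lk_parameters
    )
    if p1 is None:
        break

    # Keep only the points whose flow was found (status == 1)
    good_new_point = p1[st_d == 1]
    good_old_point = p0_point[st_d == 1]

    # Draw the trail and the current position of each point
    for i, (new_point, old_point) in enumerate(zip(good_new_point, good_old_point)):
        a, b = new_point.ravel()
        c, d = old_point.ravel()
        mask_drawing = cv2.line(
            mask_drawing, (int(a), int(b)), (int(c), int(d)), random_color[i].tolist(), 2
        )
        frame = cv2.circle(frame, (int(a), int(b)), 5, random_color[i].tolist(), -1)

    img = cv2.add(frame, mask_drawing)
    cv2.imshow("frame", img)

    # Stop early if the Esc key is pressed
    if cv2.waitKey(30) & 0xFF == 27:
        break

    # The current frame and points become the previous ones
    previous_gray = frame_gray.copy()
    p0_point = good_new_point.reshape(-1, 1, 2)
    if len(p0_point) == 0:
        break

cap_video.release()
cv2.destroyAllWindows()
```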

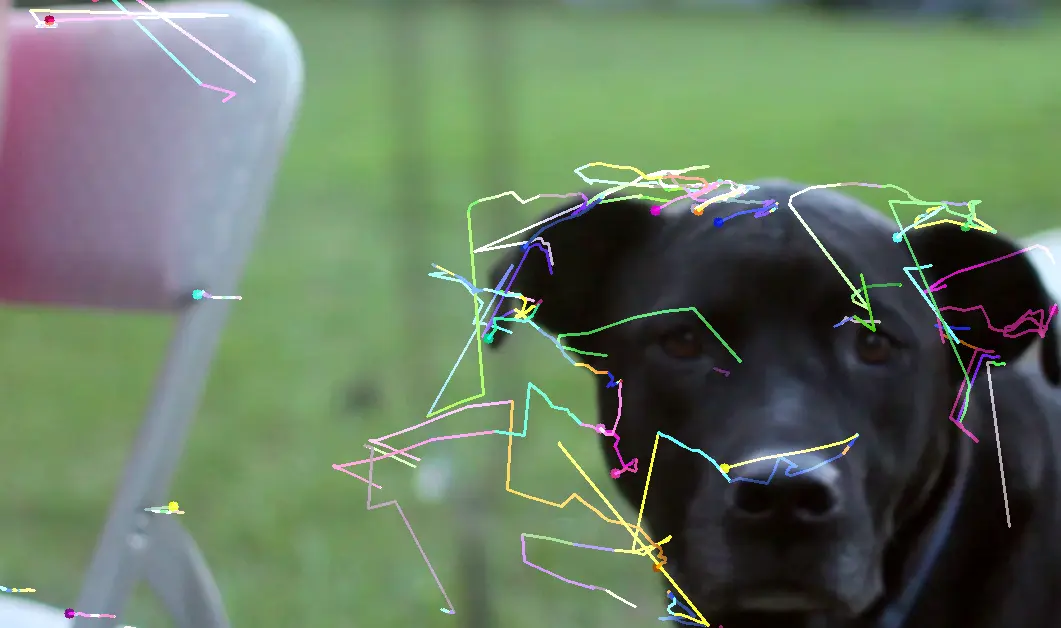

Output:

As you can see, the feature points are tracked through the video. This approach is useful when we want to follow the motion of specific points on an object.

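In the above code, the first argument of the goodFeaturesToTrack() function is the grayscale frame in which we want to find feature points. The second argument is the output array that receives the corner points; in Python, the corners are returned instead (this argument numbering follows the C++ signature).

The third argument, maxCorners, sets the maximum number of corners to return. The fourth argument, qualityLevel, sets the minimum accepted corner quality as a fraction of the strongest corner's quality (with 0.3, corners weaker than 30% of the best one are rejected), and the fifth argument, minDistance, sets the minimum Euclidean distance between the returned points.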

The sixth argument, mask, restricts the region in which points are detected: only pixels where the mask is nonzero are considered. To extract points from the whole image, we set the mask to None.

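The seventh argument, blockSize, sets the size of the neighborhood used to compute the corner measure at each pixel, and the eighth argument, gradientSize, sets the aperture size of the Sobel operator used to compute the image derivatives.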

In the above code, we stored these parameters in dictionaries created with dict() and unpacked them into the function calls with the ** operator, but we can also pass the same keyword arguments directly inside the function call.

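The first argument of the calcOpticalFlowPyrLK() function is the first input image (the previous frame), and the second argument is the second input image (the next frame).

The third argument, prevPts, holds the points to track from the previous frame, and the fourth argument, nextPts, receives their computed positions in the next frame; we pass None and use the returned array. The fifth argument, status, is an output array in which each element is 1 if the flow for the corresponding point was found and 0 otherwise.

The sixth argument, err, is an output array with an error value for each point. The seventh argument, winSize, sets the size of the search window at each pyramid level, and the eighth argument, maxLevel, sets the 0-based maximal pyramid level: 0 means no pyramid is used, and 2, as in our code, means three levels.

The last argument we pass, criteria, sets the termination criteria of the iterative search as a (type, maxCount, epsilon) tuple. In our code, the search stops after 10 iterations or when the search window moves by less than 0.03, whichever happens first.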