Pandas SQLAlchemy

In this article, we will be going through the general definition of SQLAlchemy ORM, how it compares to a pandas DataFrame and how we can convert an SQLAlchemy ORM object to a pandas DataFrame. However, we assume you are already familiar with how a pandas DataFrame and a relational database are set up for this article.

SQLAlchemy ORM

SQLAlchemy is a module that helps enhance the communication experience between a python script and the database connected to the script. This module is typically used as an ORM or Object Relational Mapping tool.

An ORM essentially “translates” the classes in a python script. With ORMs, we can write simple SQL queries to perform database-related actions.

It is through an ORM that, in a python script, the classes can be converted into a SQL table, and the functions are converted to queries. Hence, SQLAlchemy is often referred to as a bridge between a python script and a relational database.

Convert an SQLAlchemy ORM to a DataFrame

These functions will read the SQL query or table into the dataframe object. The read_sql() function delegates the process to the read_sql_query() or the read_sql_table() functions, according to the parameters provided to the function.

Here are some of the parameters typically used in the read_sql() function.

| Parameters | Description |

|---|---|

table_name (string) |

As the name suggests, this is the name of the database table. |

con (string) |

Typically contains the data connection URI. |

schema (string) |

This parameter contains the database schema name that is supposed to be queried. If there is no schema for the database, we can set this parameter to default, the value for which is set to None. |

index_col (string/list of strings) |

These are the indexes or columns. These are optional, and their default value of None can be used. |

coerce_float (bool) |

This parameter converts non-string values to floating point values. The default for this parameter is set to be True. |

parse_dates (list/dict) |

These are the list of column names that can be parsed as dates. Their default value is set to None. |

columns (list) |

This parameter is a list of column names to be selected from a database table. The default value for this parameter is set to None. |

chunksize (int) |

This parameter is the number of rows for each chunk. It is typically used to enter a large amount of data simultaneously. If it is not required to be set, we can set it to the default value, which is set to None. |

dtype (datatype name) |

This parameter can be a simple datatype name, int, bool, etc., or a dictionary of columns with their datatypes specified. |

The syntax for all these methods:

pandas.read_sql_query(

sql,

con,

index_col=None,

coerce_float=True,

params=None,

parse_dates=None,

chunksize=None,

dtype=None,

)

Typically, in Python scripts, we use this much more straightforward and simplified way to read into an SQL database:

data_frame_obj = pd.read_sql(query.statement, db_engine)

This line of code above will read the query defined in our python script and read it into our Dataframe object.

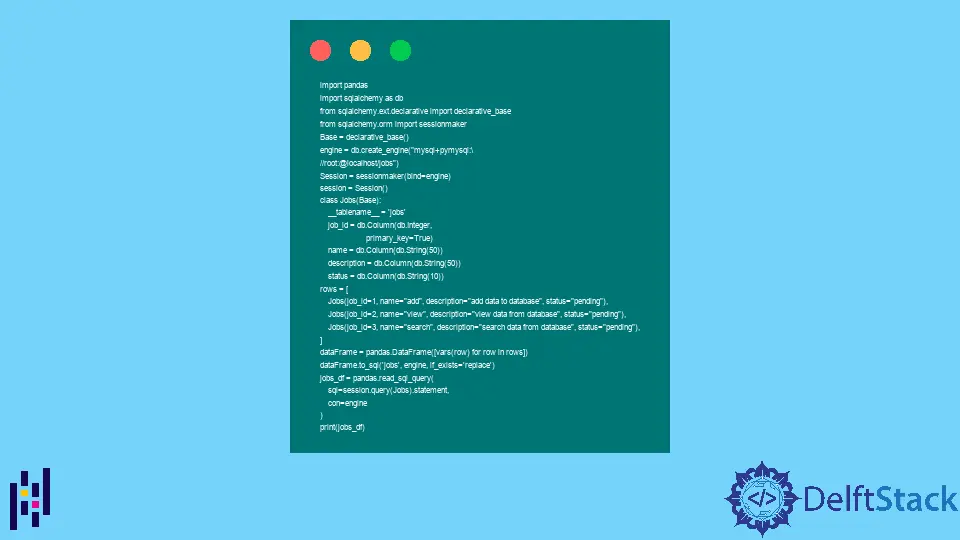

Let’s look at this example script to better understand how we can apply this.

import pandas

import sqlalchemy as db

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy.orm import sessionmaker

Base = declarative_base()

engine = db.create_engine(

"mysql+pymysql:\

//root:@localhost/jobs"

)

Session = sessionmaker(bind=engine)

session = Session()

class Jobs(Base):

__tablename__ = "jobs"

job_id = db.Column(db.Integer, primary_key=True)

name = db.Column(db.String(50))

description = db.Column(db.String(50))

status = db.Column(db.String(10))

rows = [

Jobs(job_id=1, name="add", description="add data to database", status="pending"),

Jobs(

job_id=2, name="view", description="view data from database", status="pending"

),

Jobs(

job_id=3,

name="search",

description="search data from database",

status="pending",

),

]

dataFrame = pandas.DataFrame([vars(row) for row in rows])

dataFrame.to_sql("jobs", engine, if_exists="replace")

jobs_df = pandas.read_sql_query(sql=session.query(Jobs).statement, con=engine)

print(jobs_df)

To run this script successfully, you must make sure that you have the following libraries installed.

- Pandas

- SQLAlchemy

- PyMySQL

- Docker

Once you have all these libraries set up, run the following command in the terminal of your choice to set up your docker:

docker run --name mysql-db -e MYSQL_ALLOW_EMPTY_PASSWORD=yes -p 3306:3306 -d mysql:5.6

Next, we can enter our container with this command to create the database:

docker exec -it mysql-db mysql

We run the create database query like so:

create database jobs;

Now that our environment is set up, we can exit the container and run our python script to get the following results.

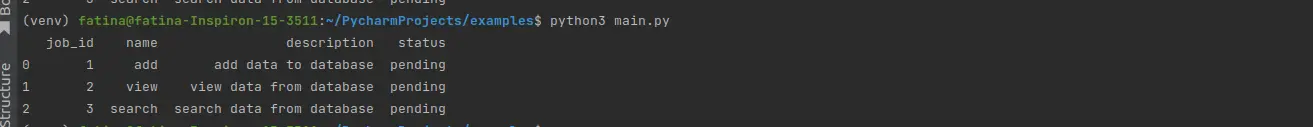

Output:

This means we have successfully converted our SQLAlchemy ORM to a Pandas Dataframe.

I am Fariba Laiq from Pakistan. An android app developer, technical content writer, and coding instructor. Writing has always been one of my passions. I love to learn, implement and convey my knowledge to others.

LinkedIn