How to Parse JSON in Node.js

In this section, we will learn to parse JSON synchronously in Node.js. Parsing JSON means converting JSON text into a data structure that the language you are using can work with directly.

JSON represents data as key-value pairs, with keys and string values enclosed in double quotation marks as required by the JSON specification. JSON data received from a server or returned by an API usually arrives as a string. In this raw form, the individual values cannot be accessed directly, so the string needs to be converted into a native object first.
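To make the difference between a raw JSON string and a parsed object concrete, here is a minimal sketch. The string literal stands in for a server response and is sample data made up for illustration.

```javascript
// A JSON document as it might arrive from a server: just a string.
// (The literal below is illustrative sample data.)
const raw = '{"id": 0, "name": "Maribel Andrews"}';

// A string has no "name" property, so the value is not reachable yet.
const before = raw.name;

// After parsing, the data is an ordinary JavaScript object.
const friend = JSON.parse(raw);
const after = friend.name;

console.log(typeof raw, before);   // string undefined
console.log(typeof friend, after); // object Maribel Andrews
```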

There are three main ways to parse JSON files in Node.js. For local files, i.e., those that reside on your computer's storage, we can use the require() function, which is normally used to load modules into the Node.js environment. However, this method is synchronous, meaning the program will not execute anything else until the file has been fully loaded and parsed. The example below uses a file named friends.json, shown first, followed by the script that loads it.

[
    {
      "id": 0,
      "name": "Maribel Andrews"
    },
    {
      "id": 1,
      "name": "Gilliam Mcdonald"
    },
    {
      "id": 2,
      "name": "Lisa Wright"
    }
]

// Load friends.json as a module; require() reads and parses it synchronously
const friends = require("./friends.json");
console.log(friends);

Sample output:

[
  { id: 0, name: 'Maribel Andrews' },
  { id: 1, name: 'Gilliam Mcdonald' },
  { id: 2, name: 'Lisa Wright' }
]

This approach suits static files but not data that changes while the program runs. Node.js caches the result of require(), so later calls return the same object even if the file has changed on disk, and picking up new data would mean parsing the file all over again by other means. For that reason, it is not the best way of parsing JSON files.
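One reason require() only suits static files is that Node.js caches whatever it loads. The sketch below illustrates this under a few assumptions: it writes a throwaway friends.json into the system temp directory (the directory prefix and file contents are made up for the demonstration), loads it with require(), changes the file, and loads it again.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Write a throwaway JSON file so the sketch is self-contained.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "require-json-"));
const file = path.join(dir, "friends.json");
fs.writeFileSync(file, JSON.stringify([{ id: 0, name: "Maribel Andrews" }]));

// First require(): the file is read and parsed, and the result is cached.
const first = require(file);

// Change the file on disk by adding a second entry.
fs.writeFileSync(
  file,
  JSON.stringify([
    { id: 0, name: "Maribel Andrews" },
    { id: 1, name: "Gilliam Mcdonald" },
  ])
);

// Second require(): served from the cache, so the change is not visible.
const second = require(file);

// Reading and parsing the file directly does see the change.
const fresh = JSON.parse(fs.readFileSync(file, "utf-8"));

console.log(second === first); // true
console.log(second.length);    // 1
console.log(fresh.length);     // 2

// Remove the temporary directory.
fs.rmSync(dir, { recursive: true, force: true });
```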

Alternatively, we could use the fs module and its fs.readFileSync() method to read the JSON file instead of loading it as a module. The fs module provides file operations such as reading, renaming, and deleting files. The fs.readFileSync() method reads the entire contents of a file synchronously. We use the same friends.json file:

[
    {
      "id": 0,
      "name": "Maribel Andrews"
    },
    {
      "id": 1,
      "name": "Gilliam Mcdonald"
    },
    {
      "id": 2,
      "name": "Lisa Wright"
    }
]

const fs = require("fs");

// No encoding is given, so readFileSync() returns the raw bytes
const friends = fs.readFileSync("./friends.json");
console.log(friends);

Sample output:

<Buffer 5b 0d 0a 20 20 20 20 7b 0d 0a 20 20 20 20 20 20 22 69 64 22 3a 20 30 2c 0d 0a 20 20 20 20 20 20 22 6e 61 6d 65 22 3a 20 22 4d 61 72 69 62 65 6c 20 41 ... 144 more bytes>

While we have successfully loaded the JSON file, we have not specified an encoding. The readFileSync() method returns a raw Buffer when the encoding option is not specified, i.e., when it is null. Text files are usually encoded as UTF-8, so we pass that encoding to get a string back.

const fs = require("fs");

// With an encoding, readFileSync() returns the file contents as a string
const friends = fs.readFileSync("./friends.json", { encoding: "utf-8" });
console.log(friends);

Sample output:

[
    {
      "id": 0,
      "name": "Maribel Andrews"
    },
    {
      "id": 1,
      "name": "Gilliam Mcdonald"
    },
    {
      "id": 2,
      "name": "Lisa Wright"
    }
]
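The effect of the encoding option can be checked directly. The following is a small sketch, assuming a throwaway file in the system temp directory (its name and contents are illustrative). It also uses the shorthand of passing the encoding as a plain string, which readFileSync() accepts in place of an options object.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Create a small sample file so the sketch runs on its own.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "encoding-demo-"));
const file = path.join(dir, "friends.json");
fs.writeFileSync(file, '[{"id": 0, "name": "Maribel Andrews"}]');

const raw = fs.readFileSync(file);           // no encoding: a Buffer of bytes
const text = fs.readFileSync(file, "utf-8"); // encoding given: a string

console.log(Buffer.isBuffer(raw)); // true
console.log(typeof text);          // string

// Decoding the Buffer by hand gives the same string.
const decoded = raw.toString("utf-8");
console.log(decoded === text);     // true

// Remove the temporary directory.
fs.rmSync(dir, { recursive: true, force: true });
```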

Now that we have successfully read the JSON file as a string, we can parse it into a JavaScript object using the JSON.parse() function, which is built into JavaScript.

This function accepts two parameters: the JSON string to be parsed and an optional parameter known as the reviver. According to the MDN documentation, if the reviver is a function, it specifies how each originally parsed value is transformed before being returned.

const fs = require("fs");

// Read the file as a UTF-8 string, then convert it into a JavaScript value
const friends = fs.readFileSync("./friends.json", { encoding: "utf-8" });
const parsed_result = JSON.parse(friends);
console.log(parsed_result);

Output:

[
  { id: 0, name: 'Maribel Andrews' },
  { id: 1, name: 'Gilliam Mcdonald' },
  { id: 2, name: 'Lisa Wright' }
]
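The example above does not use the optional reviver parameter. As a rough illustration of what it can do, the sketch below converts a date string into a Date object while parsing. The "joined" field is hypothetical and is not part of friends.json; it exists only to give the reviver something to transform.

```javascript
// Sample data; the "joined" field is hypothetical and not part of friends.json.
const text =
  '[{"id": 0, "name": "Maribel Andrews", "joined": "2021-03-15T00:00:00.000Z"}]';

// The reviver is called for every key/value pair, innermost values first.
// Whatever it returns replaces the originally parsed value.
const friends = JSON.parse(text, (key, value) => {
  if (key === "joined") {
    return new Date(value); // turn the ISO 8601 string into a Date
  }
  return value; // leave everything else unchanged
});

console.log(friends[0].joined instanceof Date);  // true
console.log(friends[0].joined.getUTCFullYear()); // 2021
console.log(friends[0].name);                    // Maribel Andrews
```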

In this section, we will see how to parse a JSON string asynchronously. The previous examples parsed JSON data synchronously. However, in Node.js, synchronous file operations are generally discouraged because they block the event loop, especially when the data is large.

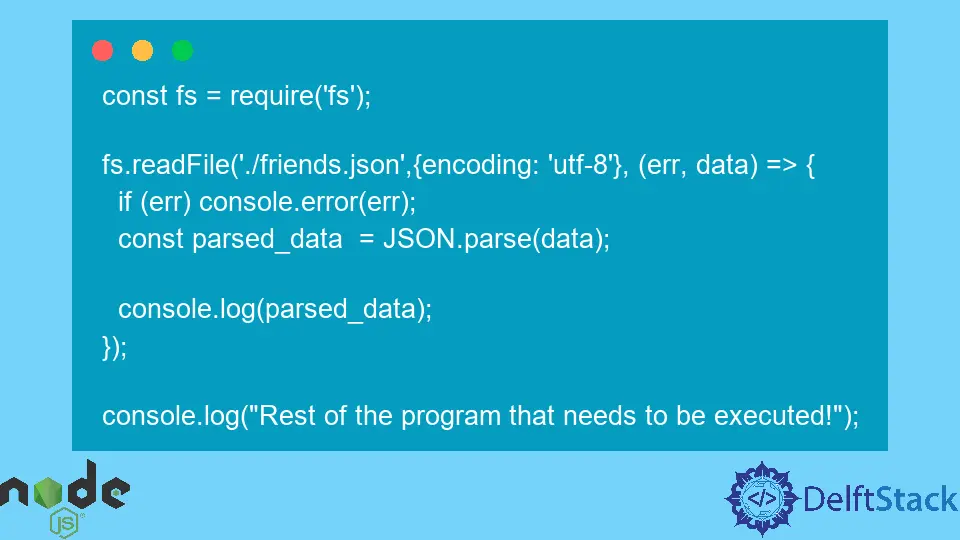

We can read the file with the fs.readFile() method and parse the JSON string into a JavaScript object inside its callback function.

This lets the rest of the program keep executing while the file is read in the background, reducing unnecessary waiting time while the data loads.

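const fs = require('fs');

// Read the file in the background; the callback runs once the read completes
fs.readFile('./friends.json', {encoding: 'utf-8'}, (err, data) => {
  if (err) {
    // Stop here: data is undefined when the read fails
    console.error(err);
    return;
  }
  const parsed_data = JSON.parse(data);
  console.log(parsed_data);
});

console.log("Rest of the program that needs to be executed!");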

Sample output:

Rest of the program that needs to be executed!

[
  { id: 0, name: 'Maribel Andrews' },
  { id: 1, name: 'Gilliam Mcdonald' },
  { id: 2, name: 'Lisa Wright' }
]
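The same asynchronous read can also be written with promises instead of a callback, using fs.promises.readFile(). This is offered as an alternative sketch rather than as the approach used above. The helper name loadJson and the temporary sample file are assumptions made for illustration. The try/catch covers both a failed read and invalid JSON, since JSON.parse() throws on malformed input.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical helper: read a file and parse it as JSON.
// Resolves to the parsed value, or to null if reading or parsing fails.
async function loadJson(filePath) {
  try {
    const text = await fs.promises.readFile(filePath, { encoding: "utf-8" });
    return JSON.parse(text);
  } catch (err) {
    console.error(`Could not load ${filePath}: ${err.message}`);
    return null;
  }
}

// Throwaway sample file in the system temp directory.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "async-json-"));
const file = path.join(dir, "friends.json");
fs.writeFileSync(file, '[{"id": 0, "name": "Maribel Andrews"}]');

// The read happens in the background; the log line below runs first.
const pending = loadJson(file).then((friends) => {
  console.log(friends);
  return friends;
});

console.log("Rest of the program that needs to be executed!");
```

Returning null on failure is only one possible design choice; a caller that needs to tell a missing file apart from malformed JSON may prefer to let the error propagate.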

While this method may seem like a better option than the synchronous methods when working with huge data, it is not. fs.readFile() still loads the entire file into memory, and when the data comes from an external source, we need to reload all of it whenever changes are made, just as with the synchronous methods. The native Node.js stream APIs are the recommended alternative when working with huge external data.
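As a rough sketch of the stream-based approach, the example below reads a file line by line with fs.createReadStream() and the readline module. JSON.parse() needs a complete JSON text, so a single large array cannot be parsed piece by piece this way. The sketch therefore assumes the data is stored as newline-delimited JSON, with one object per line, which is a different layout from the friends.json file used earlier. The file name friends.ndjson and the helper collectNames are hypothetical.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");
const readline = require("readline");

// Assumption: one JSON object per line (newline-delimited JSON),
// not a single JSON array like friends.json.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "ndjson-demo-"));
const file = path.join(dir, "friends.ndjson");
fs.writeFileSync(
  file,
  [
    '{"id": 0, "name": "Maribel Andrews"}',
    '{"id": 1, "name": "Gilliam Mcdonald"}',
    '{"id": 2, "name": "Lisa Wright"}',
  ].join("\n") + "\n"
);

// Hypothetical helper: stream the file and parse each line separately,
// so only a small part of the file is held in memory at any time.
async function collectNames(filePath) {
  const lines = readline.createInterface({
    input: fs.createReadStream(filePath, { encoding: "utf-8" }),
    crlfDelay: Infinity, // treat \r\n as a single line break
  });

  const names = [];
  for await (const line of lines) {
    if (line.trim() === "") continue; // skip blank lines
    names.push(JSON.parse(line).name);
  }
  return names;
}

const namesPromise = collectNames(file);
namesPromise.then((names) => console.log(names));
```

For a large file that really is one JSON array, a dedicated streaming JSON parser would be needed instead; that is outside what this sketch covers.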

Isaac Tony is a professional software developer and technical writer fascinated by Tech and productivity. He helps large technical organizations communicate their message clearly through writing.
