How to Remove Duplicate Lines in Bash

- Use sort and uniq to Remove Duplicate Lines in Bash
- Use the awk Command to Remove Duplicate Lines in Bash

Duplicate entries in the data a Bash script processes can cause problems such as incorrect or inconsistent results, and they also make the data harder to maintain. Removing the duplicate lines is often necessary to avoid these problems, and there are several ways to do this in Bash.

Use sort and uniq to Remove Duplicate Lines in Bash

One approach for removing duplicate entries in a Bash script is to combine the sort and uniq commands. The sort command orders the input lines, and the uniq command then filters out adjacent duplicate lines, which is why the input is sorted first.

The data.txt file contains the content below for the examples in this article.

arg1
arg2
arg3
arg2
arg2
arg1

To remove duplicate entries from the file above, you can use the following command:

sort data.txt | uniq > data-unique.txt

Output (cat data-unique.txt):

arg1
arg2
arg3

This command sorts the data.txt file in ascending order (by default) and pipes the output to the uniq command. The uniq command filters out duplicate lines from the sorted data and writes the result to a new file called data-unique.txt.

The original data.txt file is left unchanged. The new file contains each distinct entry exactly once.
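The sort step matters because uniq only collapses duplicate lines that sit next to each other. The following is a minimal sketch of that difference, assuming the data.txt contents shown earlier; the file name adjacent-only.txt is made up for illustration.

```shell
# Recreate the sample file used in this article.
printf '%s\n' arg1 arg2 arg3 arg2 arg2 arg1 > data.txt

# Without sort, uniq only merges the two neighbouring arg2 lines.
uniq data.txt > adjacent-only.txt
cat adjacent-only.txt

# With sort first, all duplicates become adjacent and are removed.
sort data.txt | uniq > data-unique.txt
cat data-unique.txt
```

The first cat prints five lines (arg1, arg2, arg3, arg2, arg1), while the second prints the three unique entries.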

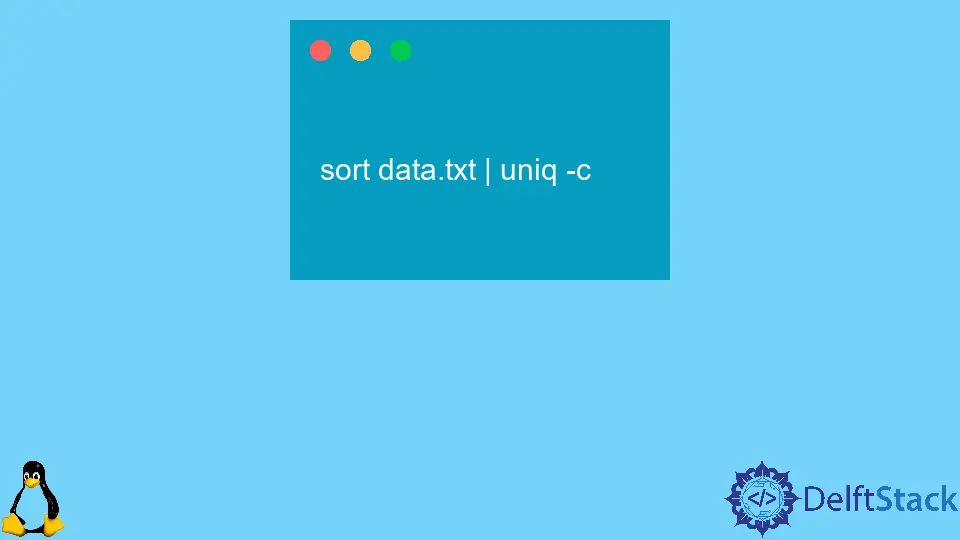

The uniq command has several options that can be used to control its behavior, such as the -d option to only print duplicate lines, or the -c option to print a count of the number of times each line appears in the input. For example, to print the number of times each line appears in the data.txt file, you can use the following command:

sort data.txt | uniq -c

This command is similar to the previous one but adds the -c option to the uniq command, which prefixes each line with the number of times it appears in the input.

For data.txt, the result looks something like this (the exact leading whitespace may vary):

2 arg1
3 arg2
1 arg3

This output shows that arg1 appears twice, arg2 appears three times, and arg3 appears once in data.txt.
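The -d option mentioned above can be tried the same way. This is a small sketch, assuming the same data.txt contents; the output file names repeated.txt and counts.txt are hypothetical, and the final sort -rn step is an addition for ranking lines by frequency rather than something the options above require.

```shell
# Recreate the sample file used in this article.
printf '%s\n' arg1 arg2 arg3 arg2 arg2 arg1 > data.txt

# -d prints one copy of each line that occurs more than once.
sort data.txt | uniq -d > repeated.txt
cat repeated.txt

# -c output can be sorted numerically (descending) to rank lines by frequency.
sort data.txt | uniq -c | sort -rn > counts.txt
cat counts.txt
```

Here repeated.txt contains arg1 and arg2 but not arg3, and the first line of counts.txt is the entry for arg2.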

Use the awk Command to Remove Duplicate Lines in Bash

Another approach for removing duplicate entries in a Bash script is to use the awk command, a powerful text processing tool that can execute numerous operations on text files. The awk command has a built-in associative array data structure, which can store and count the occurrences of each line in the input.

For example, to remove duplicate entries from the same file as earlier, you can use the following command:

awk '!a[$0]++' data.txt > data-unique.txt

Output:

arg1
arg2
arg3

This command reads the data.txt file and evaluates the expression !a[$0]++ for each input line. Here, $0 is the entire line, and a is an associative array indexed by the line's content.

The post-increment a[$0]++ returns the current count for the line (0 the first time the line is seen, because unset array elements evaluate to 0) and then increments it. As a result, the a array keeps track of how many times each line has appeared so far.

The ! operator negates the returned value, so the condition is true only when the count was 0, that is, on the first occurrence of a line. Because a condition without an action prints the line by default, each distinct line is printed once, in its original order. The output is then redirected to a new file called data-unique.txt, containing the unique entries from the data.txt file.
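To make the one-liner easier to follow, it can be written out step by step. This is an illustrative long-form sketch of the same logic, assuming the data.txt contents shown earlier; the array name seen is arbitrary.

```shell
# Recreate the sample file used in this article.
printf '%s\n' arg1 arg2 arg3 arg2 arg2 arg1 > data.txt

# Long-form equivalent of: awk '!a[$0]++' data.txt
awk '{
    if (seen[$0] == 0) {   # unset elements compare equal to 0: first occurrence
        print $0
    }
    seen[$0]++             # record that this line has now been seen
}' data.txt > data-unique.txt
cat data-unique.txt
```

Both forms print arg1, arg2, and arg3 for this input.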

The awk command also provides several options and features that can be used to control its behavior and customize its output. For example, you can use the -F option to specify a different field separator or the -v option to define a variable in the script.

You can also use the printf function to format the output of the awk command in various ways.
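As a rough sketch of how these features combine with the deduplication idiom, the example below removes lines that repeat the same first field of a comma-separated file. The file users.csv, its contents, and the variable name prefix are all hypothetical and only serve as an illustration.

```shell
# Hypothetical sample data: name,role pairs.
printf '%s\n' 'alice,admin' 'bob,user' 'alice,guest' 'carol,user' > users.csv

# -F',' splits each line on commas; -v defines the awk variable "prefix".
# Only the first line seen for each name (field 1) is formatted and printed.
awk -F',' -v prefix='kept' \
    '!seen[$1]++ { printf "%s: %s (%s)\n", prefix, $1, $2 }' \
    users.csv > users-unique.txt
cat users-unique.txt
```

The second alice line is dropped because its first field was already seen, even though the full line differs.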

The sort and uniq commands are simple and efficient tools for removing duplicate entries, but they return the lines in sorted order. The awk command keeps the lines in their original order and provides more advanced features and options for customizing the output and behavior of the script.
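The practical difference between the two approaches shows up when the input is not already sorted. The sketch below uses a hypothetical fruits.txt file, since the data.txt example happens to produce the same result either way.

```shell
# Hypothetical input whose original order is not alphabetical.
printf '%s\n' pear apple pear banana apple > fruits.txt

# sort + uniq: unique lines in sorted order (apple, banana, pear).
sort fruits.txt | uniq > fruits-sorted.txt
cat fruits-sorted.txt

# awk: unique lines in order of first appearance (pear, apple, banana).
awk '!a[$0]++' fruits.txt > fruits-ordered.txt
cat fruits-ordered.txt
```

If the order of the remaining lines matters, the awk form is usually the safer choice; if sorted output is acceptable, sort and uniq are sufficient.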

Olorunfemi is a lover of technology and computers who writes technology and coding content for developers and hobbyists. When not working, Olorunfemi learns to design, among other things.
