How to Pool Map With Multiple Arguments in Python

- Parallel Function Execution Using the pool.map() Method
- Parallel Function Execution With Multiple Arguments Using the pool.starmap() Method
- Parallel Function Execution With Multiple Arguments Using the Pool.apply_async() Method
- Conclusion

This article will explain different methods to perform parallel function execution using the multiprocessing module in Python.

The multiprocessing module provides the functionalities to perform parallel function execution with multiple inputs and distribute input data across different processes.

We can parallelize the function’s execution with different input values by using the following methods in Python.

Parallel Function Execution Using the pool.map() Method

The pool.map(function, iterable) method applies the function to each item of the input iterable, distributing the calls across the pool's worker processes. It blocks until every call has finished and returns the results as a list in the same order as the inputs. Therefore, if we want to run a single-argument function in parallel over different inputs, we can use the pool.map() method.

The example code below demonstrates how to use the pool.map() method to parallelize the function execution in Python.

from multiprocessing import Pool


def myfunc(x):
    return 5 + x


if __name__ == "__main__":
    with Pool(3) as p:
        print(p.map(myfunc, [1, 2, 3]))

Here, myfunc is a simple function that takes a single argument x and returns the result of 5 + x. This function is designed to be easily parallelizable, as it operates independently on its input.

We then use the with Pool(3) as p: statement to create a pool of three worker processes. Because Pool is used as a context manager, the pool is terminated automatically once we exit the block.

The parallel processing is carried out by p.map(myfunc, [1, 2, 3]), where we use the map function of the Pool object, akin to Python’s built-in map. This function applies myfunc to each element in the iterable [1, 2, 3], with each application executed in parallel across the pool’s worker processes.

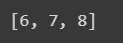

Output:
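As a minimal sketch of how pool.map() hands back its results, the variation below tags each result with the ID of the worker process that computed it. The names add_five_with_pid and run_map are hypothetical helpers, not part of the original example. The point is only that the return value is a plain list in input order, whichever worker handled each item.

```python
import os
from multiprocessing import Pool


def add_five_with_pid(x):
    # Hypothetical helper: return the result together with the worker's PID
    return x + 5, os.getpid()


def run_map(values):
    # Pool.map blocks until every item is processed and returns a list
    with Pool(3) as p:
        return p.map(add_five_with_pid, values)


if __name__ == "__main__":
    pairs = run_map([1, 2, 3])
    # The computed values follow the input order: [6, 7, 8]
    print([value for value, _pid in pairs])
    # PIDs differ from run to run, so no expected value is shown for them
    print({pid for _value, pid in pairs})
```

Which worker handles which item is up to the pool, so the set of PIDs may contain anywhere from one to three entries.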

If the input function takes multiple arguments, we can fix some of them in advance with functools.partial() and hand the resulting callable to the pool.

The example below freezes the first argument of a three-argument function with partial() and then uses pool.starmap() to supply the remaining two arguments in parallel.

import multiprocessing
from functools import partial


# Example function with three arguments
def multiply(x, y, z):
    return x * y * z


# Main execution block
if __name__ == "__main__":
    # Creating a pool of workers
    with multiprocessing.Pool() as pool:
        # Freezing the first argument of the function 'multiply' to 10
        partial_multiply = partial(multiply, 10)

        # List of tuples, each containing two arguments
        args_list = [(2, 3), (4, 5), (6, 7)]

        # Mapping the partial function across the pool
        results = pool.starmap(partial_multiply, args_list)

    # Displaying the results
    print("Results:", results)

In this example, we have defined a function multiply(x, y, z) that simply multiplies its three arguments.

Our goal is to execute this function in parallel for various combinations of y and z, while keeping x constant. To achieve this, we utilize functools.partial().

We create the partial_multiply object using partial(multiply, 10). Here, multiply is the target function, and 10 is the fixed value for the first argument x.

This partial object behaves like the multiply function where x is always 10.

Our args_list contains tuples, each representing a pair of arguments (y and z) for which the multiply function needs to be executed. Notice that we don’t need to specify the first argument (x) as it’s already set in partial_multiply.

We then use the multiprocessing.Pool to create a pool of worker processes. With pool.starmap(), we map the partial_multiply function over args_list.

The starmap method is essential here, allowing the elements of args_list to be unpacked into the function arguments.

Finally, we print out the results. Each element in results is the product of 10 (our fixed x) and the two values in the corresponding tuple of args_list, which gives [60, 200, 420].

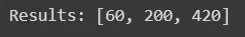

Output:

As the above example shows, the shortcoming of this method is that the frozen first argument is the same for every call: x is always 10, and only y and z vary.
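A related variation, sketched here under the assumption that the function accepts its parameters by keyword, is to freeze arguments by name instead of by position. That leaves the first argument free, so plain pool.map() can supply it. The wrapper name scale_all is hypothetical and used only for illustration.

```python
import multiprocessing
from functools import partial


def multiply(x, y, z):
    return x * y * z


def scale_all(xs, y, z):
    # Freeze y and z by keyword so that x stays free for pool.map()
    fixed_yz = partial(multiply, y=y, z=z)
    with multiprocessing.Pool() as pool:
        # Each worker call is multiply(x, y=y, z=z) for one x from xs
        return pool.map(fixed_yz, xs)


if __name__ == "__main__":
    print(scale_all([1, 2, 3], y=2, z=3))  # [6, 12, 18]
```

The partial object can be sent to the workers because multiply is defined at module level. A lambda or a nested function in its place would generally fail to pickle.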

Parallel Function Execution With Multiple Arguments Using the pool.starmap() Method

If we want to execute a function in parallel with multiple arguments, we can do so using the pool.starmap(function, iterable) method.

Like the pool.map(function, iterable) method, the pool.starmap(function, iterable) method applies the function to each item of the iterable and returns the results as a list. The difference is that it expects each item of the iterable to be an iterable itself (for example, a tuple), which is unpacked into the function's positional arguments.

By using the pool.starmap() method, we can provide different values for all arguments of the function in every call, which pool.map() on its own cannot do for a multi-argument function.

We can perform parallel function execution with multiple arguments in Python using the pool.starmap() method in the following way.

import multiprocessing


# A sample function that takes multiple arguments
def calculate_area(length, width):
    return length * width


# Main execution block
if __name__ == "__main__":
    # Define a list of tuples for arguments
    rectangle_dimensions = [(1, 2), (3, 4), (5, 6)]

    # Create a pool of worker processes
    with multiprocessing.Pool() as pool:
        # Use starmap to apply 'calculate_area' across the list of tuples
        areas = pool.starmap(calculate_area, rectangle_dimensions)

    # Print the results
    print("Areas:", areas)

In our example, we define a function calculate_area(length, width) to calculate the area of a rectangle. We intend to use this function across a list of rectangle dimensions, represented as tuples (length, width).

We then create a pool of worker processes using with multiprocessing.Pool() as pool:. This context manager ensures that the pool is terminated and its worker processes are cleaned up after its block of code is executed.

Inside this block, we call pool.starmap(calculate_area, rectangle_dimensions). The calculate_area function is our target function, and rectangle_dimensions is an iterable of tuples, where each tuple contains a pair of length and width for a rectangle.

What starmap() does here is essentially unpack each tuple from rectangle_dimensions and pass them as arguments to calculate_area. For instance, the first tuple (1, 2) is unpacked and passed as calculate_area(1, 2).

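This operation is performed in parallel across the worker processes in the pool. The benefit shows up with large datasets or computationally intensive functions. For a function as cheap as calculate_area, the cost of starting processes and transferring data typically outweighs the gain.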

Finally, we print the results stored in areas. Each element in areas is the area of a rectangle corresponding to the respective tuple in rectangle_dimensions.

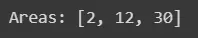

Output:
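When the arguments live in separate lists rather than in ready-made tuples, one possible approach is to build the tuples on the fly with zip(), and to use itertools.repeat() for any argument that should stay constant. The following is only a sketch: the volume function and the volumes_with_fixed_height wrapper are hypothetical names introduced for illustration.

```python
import multiprocessing
from itertools import repeat


def volume(length, width, height):
    return length * width * height


def volumes_with_fixed_height(lengths, widths, height):
    # zip() pairs the lists element by element; repeat() supplies the constant.
    # zip() stops at the shortest input, so the endless repeat() is safe here.
    args = zip(lengths, widths, repeat(height))
    with multiprocessing.Pool() as pool:
        return pool.starmap(volume, args)


if __name__ == "__main__":
    print(volumes_with_fixed_height([1, 3, 5], [2, 4, 6], height=10))
    # [20, 120, 300]
```

Unlike freezing an argument with partial(), this keeps every argument in the argument tuples, so any of them can later be switched from a constant to a per-call value by replacing repeat() with a list.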

Parallel Function Execution With Multiple Arguments Using the Pool.apply_async() Method

The Pool.apply_async() method offers a flexible way to execute functions asynchronously in worker processes. It accepts the arguments of the call as a tuple, so it works naturally with functions that take multiple arguments.

Each call to apply_async() submits a single function call to the pool and returns immediately with a result object instead of waiting for the call to finish. This makes the method a good fit for non-blocking workloads in which each task might take a variable amount of time to complete.

import multiprocessing

# Function to compute the power of a number

def power(base, exponent):

return base**exponent

# Main execution block

if __name__ == "__main__":

# Create a pool of worker processes

with multiprocessing.Pool() as pool:

# List of arguments

args_list = [(2, 3), (4, 2), (3, 3), (5, 2)]

# List to store the result objects

results = [pool.apply_async(power, args) for args in args_list]

# Retrieve and print the results

for res in results:

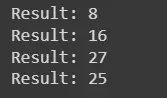

print("Result:", res.get())

In our code, we define a function power(base, exponent) that calculates the power of a number. To handle this function concurrently across multiple processes, we utilize the apply_async() method from Python’s multiprocessing module.

We start by creating a pool of worker processes using the context manager with multiprocessing.Pool() as pool:. This setup allows us to manage the pool efficiently, ensuring that resources are properly allocated and freed up after use.

Inside this context manager, we loop over our args_list, which contains tuples of arguments to be passed to the power function. Each tuple represents a distinct set of parameters for which we want to calculate the power.

For each tuple in args_list, we call pool.apply_async(power, args). It returns a result object immediately, without waiting for the function to complete.

We collect these result objects in the results list. Each result object is a placeholder for the eventual output of the power function.

By using apply_async(), we effectively distribute the computation of power across multiple processes.

Output:
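Besides positional arguments, apply_async() also accepts keyword arguments through its kwds parameter, and get() accepts an optional timeout. The sketch below illustrates both. The submit_powers wrapper, the default exponent, and the 10-second timeout are assumptions made for this illustration, not part of the original example.

```python
import multiprocessing


def power(base, exponent=2):
    return base**exponent


def submit_powers(bases, exponent, timeout=10):
    with multiprocessing.Pool() as pool:
        # args carries positional arguments, kwds carries keyword arguments
        pending = [
            pool.apply_async(power, args=(b,), kwds={"exponent": exponent})
            for b in bases
        ]
        # get() runs while the pool is still alive; if a result is not ready
        # within `timeout` seconds it raises multiprocessing.TimeoutError
        return [res.get(timeout=timeout) for res in pending]


if __name__ == "__main__":
    print(submit_powers([2, 3, 4], exponent=3))  # [8, 27, 64]
```

If the worker function raises an exception, get() re-raises it in the parent process, so wrapping the get() calls in try/except is a reasonable place to handle task failures.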

Conclusion

In this article, we looked at several ways to run a function in parallel with Python's multiprocessing module. The pool.map() method handles single-argument functions, and functools.partial() lets us fix some arguments of a multi-argument function before handing it to the pool. The pool.starmap() method unpacks an iterable of argument tuples, so every argument can vary between calls.

For asynchronous and non-blocking tasks, Pool.apply_async() submits individual calls and returns result objects whose values are retrieved later with get().

These techniques let Python programs spread independent work across multiple CPU cores. They pay off most when each call does enough work to outweigh the cost of starting processes and passing data between them.