How to Use the std::mutex Synchronization Primitive in C++

This article will demonstrate how to use the std::mutex synchronization primitive in C++.

Use std::mutex to Protect Access to Shared Data Between Threads in C++

Generally, synchronization primitives are tools for the programmer to safely control access to shared data in programs that utilize concurrency.

Since unsynchronized modification of shared memory locations from multiple threads yields erroneous results and unpredictable program behavior, it is up to the programmer to guarantee that the program runs in a deterministic manner. Synchronization and other topics in concurrent programming are quite complex and often require extensive knowledge of both the software and hardware layers of modern computing systems.

Thus, we will assume some previous knowledge of these concepts while covering only a very small part of the synchronization topic in this article. Namely, we will introduce the concept of mutual exclusion, also known as a mutex. Programming languages often give the same name to the object that implements it, e.g., std::mutex.

A mutex is a type of locking mechanism that can surround a critical section in the program and ensure that access to it is protected. When we say that the shared resource is protected, we mean that while one thread holds the mutex and operates on the shared object, any other thread that tries to acquire the same mutex is blocked until the first thread releases it.

Notice that this behavior might not be optimal for some problems, as lock contention, resource starvation, or other performance-related issues may arise. Other mechanisms address these issues and offer different characteristics, but they are beyond the scope of this article.

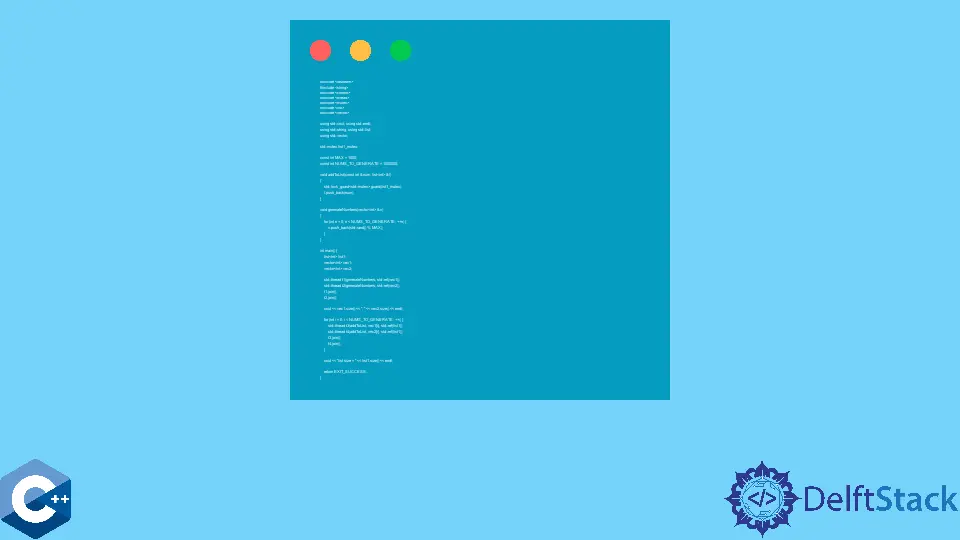

In the following example, we showcase the basic usage of the std::mutex class as provided by the C++ standard library. Note that standard support for threading was added in C++11.

First, we need to construct a std::mutex object, which can then be used to control access to the shared resource. std::mutex has two core member functions: lock and unlock. The lock function is called before the shared resource is accessed, and unlock is called after the access is finished.
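As a minimal sketch of the lock and unlock calls described above, the following helper increments a shared counter from several threads. The function name `countWithLockUnlock` and its parameters are hypothetical and are not part of this article's main example.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: every thread increments the same counter, and each
// increment is wrapped in an explicit lock()/unlock() pair.
long countWithLockUnlock(int numThreads, int incrementsPerThread) {
    long counter = 0;         // shared data
    std::mutex counterMutex;  // protects counter

    auto worker = [&]() {
        for (int i = 0; i < incrementsPerThread; ++i) {
            counterMutex.lock();    // enter the critical section
            ++counter;              // only one thread at a time runs this line
            counterMutex.unlock();  // leave the critical section
        }
    };

    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back(worker);
    }
    for (auto &th : threads) {
        th.join();
    }
    return counter;
}
```

Called as `countWithLockUnlock(4, 10000)`, the function returns 40000, because no increment can be lost while the mutex serializes access to the counter.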

The code placed between these calls is known as the critical section. Even though calling lock and unlock directly is correct, C++ provides a useful class template, std::lock_guard, which locks the given mutex on construction and automatically unlocks it when the enclosing scope is left. The main reason to use lock_guard instead of calling the lock and unlock member functions directly is to guarantee that the mutex is unlocked on every code path, even if an exception is thrown. For that reason, our code example uses std::lock_guard to demonstrate the usage of std::mutex.
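The exception-safety argument can be illustrated with a small sketch. The names `dataMutex`, `sharedData`, `appendPositive`, and `demoExceptionSafety` are hypothetical and chosen only for this illustration.

```cpp
#include <cstddef>
#include <mutex>
#include <stdexcept>
#include <vector>

std::mutex dataMutex;         // hypothetical mutex protecting sharedData
std::vector<int> sharedData;  // hypothetical shared container

// Appends value to sharedData, or throws if the value is not positive.
void appendPositive(int value) {
    std::lock_guard<std::mutex> guard(dataMutex);  // locks here
    if (value <= 0) {
        // The guard's destructor still runs during stack unwinding,
        // so the mutex is released even on this path.
        throw std::invalid_argument("value must be positive");
    }
    sharedData.push_back(value);
}  // the guard unlocks the mutex here on the normal path

// Triggers the throwing path first, then locks the same mutex again.
std::size_t demoExceptionSafety() {
    try {
        appendPositive(-1);
    } catch (const std::invalid_argument &) {
        // Expected: nothing was appended.
    }
    appendPositive(7);  // succeeds because the failed call released the mutex
    std::lock_guard<std::mutex> guard(dataMutex);
    return sharedData.size();
}
```

With a manual lock call and no matching unlock on the throwing path, the second call to `appendPositive` would try to lock a mutex that was never released. With `std::lock_guard`, the first call to `demoExceptionSafety` returns 1.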

The main program creates two vector containers of random integers and then pushes the contents of both to a list. The tricky part is that we want to use multiple threads to add elements to the list.

We also invoke the generateNumbers function with two threads, but these operate on different vector objects, so the vectors themselves need no synchronization. Once we have generated the integers, the list can be filled by calling the addToList function.

Note that this function starts by constructing a lock_guard and then performs the operations that need to be conducted on the list. In this case, it only calls the push_back function on the given list object.

    #include <cstdlib>     // std::rand, EXIT_SUCCESS
    #include <functional>  // std::ref
    #include <iostream>
    #include <list>
    #include <mutex>
    #include <thread>
    #include <vector>

    using std::cout;
    using std::endl;
    using std::list;
    using std::vector;

    // Protects every access to the shared list.
    std::mutex list1_mutex;

    const int MAX = 1000;
    const int NUMS_TO_GENERATE = 1000000;

    // Appends num to l; the guard locks the mutex here and
    // unlocks it automatically when the function returns.
    void addToList(const int &num, list<int> &l) {
        std::lock_guard<std::mutex> guard(list1_mutex);
        l.push_back(num);
    }

    // Fills v with random integers. Each thread gets its own vector.
    // Caveat: whether std::rand is thread-safe is implementation-defined.
    void generateNumbers(vector<int> &v) {
        for (int n = 0; n < NUMS_TO_GENERATE; ++n) {
            v.push_back(std::rand() % MAX);
        }
    }

    int main() {
        list<int> list1;
        vector<int> vec1;
        vector<int> vec2;

        std::thread t1(generateNumbers, std::ref(vec1));
        std::thread t2(generateNumbers, std::ref(vec2));
        t1.join();
        t2.join();

        cout << vec1.size() << ", " << vec2.size() << endl;

        // Two short-lived threads per iteration, both writing to list1.
        for (int i = 0; i < NUMS_TO_GENERATE; ++i) {
            std::thread t3(addToList, vec1[i], std::ref(list1));
            std::thread t4(addToList, vec2[i], std::ref(list1));
            t3.join();
            t4.join();
        }

        cout << "list size = " << list1.size() << endl;

        return EXIT_SUCCESS;
    }

Output:

    1000000, 1000000
    list size = 2000000

In the previous code snippet, we chose to create two separate threads in every iteration of the for loop and join them in the same iteration. This scenario is inefficient, as creating and destroying threads takes precious execution time, but we only offer it to demonstrate the mutex usage.
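One way to avoid the per-iteration thread creation is to start only two threads and let each one append a whole vector to the list. The following is a sketch under that assumption. The names `listMutex`, `addAllToList`, and `fillListWithTwoThreads` are hypothetical and not part of the original example.

```cpp
#include <cstddef>
#include <functional>
#include <list>
#include <mutex>
#include <thread>
#include <vector>

std::mutex listMutex;  // hypothetical mutex protecting the destination list

// Appends every element of src to dst, locking once per push_back.
void addAllToList(const std::vector<int> &src, std::list<int> &dst) {
    for (int value : src) {
        std::lock_guard<std::mutex> guard(listMutex);
        dst.push_back(value);
    }
}

// Starts exactly two threads, one per source vector, and returns the
// size of the list they filled together.
std::size_t fillListWithTwoThreads(const std::vector<int> &a,
                                   const std::vector<int> &b) {
    std::list<int> result;
    std::thread t1(addAllToList, std::cref(a), std::ref(result));
    std::thread t2(addAllToList, std::cref(b), std::ref(result));
    t1.join();
    t2.join();
    return result.size();
}
```

The mutex is still acquired once per element, so the two threads contend for it just as before. Only the cost of creating and joining threads is reduced, from two threads per element to two threads in total.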

Usually, if a program needs to manage multiple threads over an extended period, the concept of a thread pool is used. The simplest form of this concept creates a fixed number of threads at the start of the work routine and then assigns them units of work in a queue-like manner. When a thread completes its work unit, it is reused for the next pending work unit. Keep in mind, though, that our driver code is only designed to be a simple simulation of a multi-threaded workflow in a program.
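The queue-like assignment of work units can be sketched in a very reduced form. This is not a full thread pool: it assumes that all work units are known before the workers start, so no condition variable is needed, and the function name `drainQueueWithWorkers` and its parameters are hypothetical.

```cpp
#include <list>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Starts numWorkers threads that repeatedly take the next pending unit
// from the queue and append it to a shared result list.
std::list<int> drainQueueWithWorkers(std::queue<int> pending,
                                     unsigned numWorkers) {
    std::list<int> results;
    std::mutex queueMutex;    // protects pending
    std::mutex resultsMutex;  // protects results

    auto worker = [&]() {
        while (true) {
            int unit;
            {
                // Take the next work unit, or stop when none are left.
                std::lock_guard<std::mutex> guard(queueMutex);
                if (pending.empty()) {
                    return;
                }
                unit = pending.front();
                pending.pop();
            }
            // The "work" here is only storing the unit in the result list.
            std::lock_guard<std::mutex> guard(resultsMutex);
            results.push_back(unit);
        }
    };

    std::vector<std::thread> workers;
    for (unsigned i = 0; i < numWorkers; ++i) {
        workers.emplace_back(worker);
    }
    for (auto &w : workers) {
        w.join();
    }
    return results;
}
```

Each worker is reused for many work units instead of being created per unit. The order of elements in the returned list depends on thread scheduling, so only its size and contents are predictable, not the order.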

Founder of DelftStack.com. Jinku has worked in the robotics and automotive industries for over 8 years. He sharpened his coding skills when he needed to do the automatic testing, data collection from remote servers and report creation from the endurance test. He is from an electrical/electronics engineering background but has expanded his interest to embedded electronics, embedded programming and front-/back-end programming.
